The Imperative for Knowledge Grounding in Large Language Models

The advent of Large Language Models (LLMs) has marked a revolutionary milestone in artificial intelligence, demonstrating remarkable capabilities in natural language understanding and generation.1 However, the very architecture that grants them their fluency also imposes fundamental limitations. These inherent constraints, namely the static nature of their knowledge and their propensity for factual invention, have created a critical need for a framework that can ground these models in verifiable, real-world information. Retrieval-Augmented Generation (RAG) has emerged as a leading solution to this challenge, helping to turn LLMs from powerful but sometimes unreliable systems into more trustworthy, enterprise-ready tools.2

The Parametric Knowledge Boundary

The knowledge of a standard LLM is “parametric,” meaning it is encoded entirely within the model’s internal parameters (its weights and biases) during a finite training period.4 This training process relies on a massive but static dataset, which becomes frozen in time upon the model’s completion. This creates a “knowledge cutoff,” an informational event horizon beyond which the model has no awareness.3 Consequently, when queried about events, discoveries, or data that emerged after its training concluded, an LLM is fundamentally incapable of providing an informed response and may instead generate outdated or inaccurate information.8

This limitation is particularly acute in dynamic enterprise environments where the freshness and relevance of information are paramount for decision-making.5 Furthermore, general-purpose LLMs, trained on broad public data, inherently lack depth in specialized domains and have no access to private or proprietary organizational knowledge. This data is inaccessible during training due to its confidential nature or because it is too niche to be included in a general corpus, rendering the LLM ineffective for many internal business use cases.8

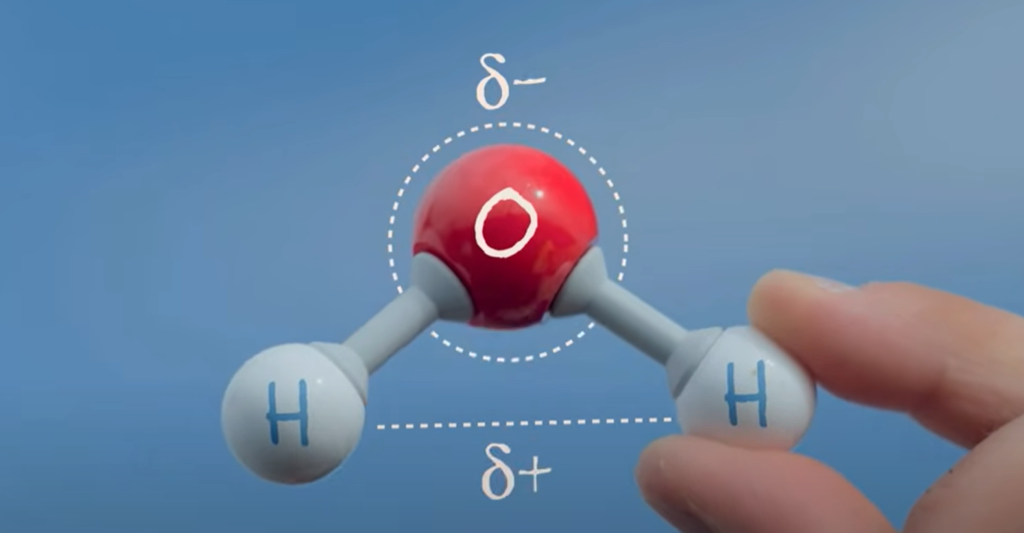

The Challenge of AI Hallucination

A direct consequence of the parametric knowledge boundary is the phenomenon of “hallucination,” also known as confabulation. This is defined as the tendency of an LLM to generate responses that are plausible-sounding, coherent, and confidently delivered but are factually incorrect or entirely fabricated.3 This behavior stems from several root causes. When faced with a query that falls into a gap in its training data or lies beyond its knowledge cutoff, the model attempts to extrapolate from learned patterns, essentially making an educated guess that prioritizes linguistic plausibility over factual truth.6 At its core, an LLM is a pattern-prediction engine, not a truth-seeking one; it lacks any native mechanism to fact-check its own output against external, real-time sources.6

While amusing in casual contexts, hallucinations represent a significant liability in professional settings. The framing of this issue has evolved from a purely technical limitation to a critical business risk. In academic literature, hallucination is often described as a model flaw.3 However, in enterprise and industry contexts, the focus shifts to the severe consequences of this flaw: the spread of misinformation, the potential leakage of sensitive data patterns, and the reputational damage that follows.1 In high-stakes “Your Money or Your Life” (YMYL) domains such as healthcare, finance, and legal services, a single hallucinated response can have dire consequences.1 This reframing of hallucination from a “bug” to a “risk” explains the strategic imperative behind RAG’s rapid adoption. RAG is not merely a tool for improving performance; it is a foundational component of responsible AI, providing a mechanism for governance, auditability, and risk mitigation by ensuring that AI-generated content is traceable to a verifiable source.1

Introducing Retrieval-Augmented Generation (RAG): The Solution Framework

Retrieval-Augmented Generation is an AI framework specifically designed to address these fundamental LLM limitations.9 The core principle of RAG is to combine the LLM’s intrinsic parametric knowledge with the vast, dynamic, non-parametric information stored in external knowledge bases.4 The framework changes the order of operations: instead of immediately generating a response from its internal memory, a RAG system first retrieves relevant, up-to-date, and authoritative information from a specified external data source.5 This retrieved context is then provided to the LLM along with the original query, effectively “grounding” the model’s subsequent output in verifiable facts.6 This paradigm shift enhances the accuracy, credibility, and timeliness of LLM-generated content, making LLMs more reliable and valuable assets for knowledge-intensive tasks.1

The Anatomy of a Retrieval-Augmented Generation System

A RAG system is not a monolithic entity but a sophisticated pipeline composed of distinct offline and online phases. The offline phase involves preparing the knowledge base for efficient search, while the online phase executes in real-time to answer a user’s query. Understanding this anatomy is crucial for building and optimizing robust RAG applications.

Architectural Overview and Diagram

The RAG pattern is composed of two main processes: the “Ingestion” or “Indexing” process, which happens offline to prepare the data, and the “Inference” or “Query” process, which happens in real-time when a user asks a question.27 The following diagram illustrates the complete end-to-end architecture.

```mermaid
graph TD
    subgraph offline ["Offline: Data Ingestion & Indexing Pipeline"]
        A[External Data Sources] --> B("Load & Chunk")
        B --> C{Embedding Model}
        C --> D[(Vector Database)]
    end

    subgraph online ["Online: Real-time Inference Pipeline"]
        E[User Query] --> F{Embedding Model}
        F --> G(Similarity Search)
        G --> H("Augmented Prompt<br>(Query + Retrieved Context)")
        H --> I{"LLM<br>(Generator)"}
        I --> J[Grounded Response]
    end

    %% The indexed vector store feeds the online similarity search
    D --> G

    style A fill:#D6EAF8,stroke:#333,stroke-width:2px
    style D fill:#D1F2EB,stroke:#333,stroke-width:2px
    style E fill:#FEF9E7,stroke:#333,stroke-width:2px
    style J fill:#E8F8F5,stroke:#333,stroke-width:2px
```

Architectural Flow Description:

- Data Ingestion (Offline): The process begins by sourcing data from various external locations, such as document repositories, databases, or APIs.66 This data is loaded, cleaned, and broken down into smaller, semantically coherent “chunks”.20 Each chunk is then processed by an embedding model, which converts the text into a numerical vector representation.20 These vector embeddings are stored and indexed in a specialized vector database, creating a searchable knowledge library.20

- Inference (Online): When a user submits a query, the real-time pipeline is activated.66 The user’s query is converted into a vector embedding using the same model from the ingestion phase.8 The system then performs a similarity search in the vector database to find the indexed data chunks that are most semantically relevant to the query.20 This retrieved information is then combined with the original user query to create an “augmented prompt”.8 This enriched prompt, now containing both the question and the factual context, is sent to the LLM (the generator), which synthesizes the information to produce a final, factually grounded response.19
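The two phases described above can be sketched end to end in a few lines of dependency-free Python. This is a toy illustration under stated assumptions, not a production design: a bag-of-words term-count vector stands in for a neural embedding model, an in-memory list stands in for the vector database, each short document is treated as a single chunk, and the final LLM call is omitted. The documents and function names are hypothetical.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Offline phase: load documents (one chunk each here), embed them, and store the vectors.
documents = [
    "The 2024 travel policy caps hotel costs at 200 dollars per night.",
    "Expense reports must be filed within 30 days of travel.",
    "The cafeteria opens at 8 am on weekdays.",
]
index = [(doc, embed(doc)) for doc in documents]

# Online phase: embed the query with the SAME model, search, then augment the prompt.
def retrieve(query: str, k: int = 2) -> list[str]:
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]

def build_prompt(query: str, context: list[str]) -> str:
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"

query = "What is the hotel cost cap in the travel policy?"
prompt = build_prompt(query, retrieve(query))
print(prompt)  # this augmented prompt would then be sent to the generator LLM
```

Swapping `embed` for a real embedding model and `index` for a vector database changes the components, not the shape of the flow.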

The Data Ingestion and Indexing Pipeline (The “Offline” Phase)

Before a RAG system can answer any questions, its external knowledge must be meticulously prepared and indexed. This process is foundational to the system’s ultimate performance.

- Document Loading and Preprocessing: The process begins by sourcing data from a variety of locations, which can include document repositories, databases, or APIs.17 This raw data is then preprocessed, a cleaning step that may involve removing stop words, normalizing text, and eliminating duplicate information to ensure the quality of the knowledge base.9

- Chunking Strategies: Since LLMs have finite context windows and operate more effectively on focused pieces of text, large documents must be broken down into smaller, semantically coherent “chunks”.3 The choice of chunking strategy is a critical design decision. Common approaches include fixed-size chunking (e.g., every 256 tokens), sentence-based chunking, or more advanced custom methods that respect the logical structure of a document (e.g., breaking at sections or paragraphs).19

- Embedding Generation: Each text chunk is then passed through an embedding model, typically a transformer-based model like BERT.21 This model converts the text into a high-dimensional numerical vector, known as a vector embedding.17 This embedding captures the semantic meaning of the chunk, allowing the system to search for information based on conceptual similarity rather than simple keyword matching.3

- Vector Databases and Indexing: The generated vector embeddings are stored and indexed in a specialized vector database.3 Vector databases such as Qdrant or ChromaDB, along with similarity-search libraries such as Faiss, are highly optimized for efficient vector similarity search, enabling the system to rapidly find the chunks whose semantic meaning is closest to that of a user’s query.20
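The preprocessing and chunking choices above can be sketched in plain Python. This is a minimal illustration under stated assumptions: whitespace-separated words stand in for model tokens (a real pipeline would count tokens with the embedding model's tokenizer), stop-word removal is omitted, the `overlap` parameter is a common refinement not described above, and all function names are hypothetical.

```python
import re

def normalize(text: str) -> str:
    """Minimal cleanup: collapse runs of whitespace (stop-word removal omitted)."""
    return re.sub(r"\s+", " ", text).strip()

def dedupe(chunks: list[str]) -> list[str]:
    """Drop exact duplicate chunks while preserving their original order."""
    return list(dict.fromkeys(chunks))

def fixed_size_chunks(text: str, size: int = 256, overlap: int = 0) -> list[str]:
    """Fixed-size chunking over whitespace 'tokens', with optional overlap between windows."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("require size > 0 and 0 <= overlap < size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    # Stop once the remaining words are already covered by the previous window's overlap.
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]

def sentence_chunks(text: str, max_words: int = 256) -> list[str]:
    """Sentence-based chunking: pack whole sentences until the word budget is reached.

    A single sentence longer than max_words becomes its own (oversized) chunk.
    """
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    chunks: list[str] = []
    current: list[str] = []
    current_len = 0
    for sentence in sentences:
        n = len(sentence.split())
        if current and current_len + n > max_words:
            chunks.append(" ".join(current))
            current, current_len = [], 0
        current.append(sentence)
        current_len += n
    if current:
        chunks.append(" ".join(current))
    return chunks

text = normalize("RAG retrieves context.   It then generates an answer.\nChunking matters.")
print(fixed_size_chunks(text, size=4, overlap=1))
print(sentence_chunks(text, max_words=6))
```

In this example the first fixed-size window ends mid-sentence ("RAG retrieves context. It"), which is exactly the kind of boundary problem that sentence- or structure-aware chunking is meant to avoid.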

The Core RAG Workflow: From Query to Response (The “Online” Phase)

Once the knowledge base is indexed, the system is ready to handle user queries through a real-time, multi-phase workflow.

Phase 1: The Retriever

The retriever’s responsibility is to find the most relevant pieces of information from the indexed knowledge base.

- Query Encoding: When a user submits a query, it is transformed into a vector embedding using the exact same embedding model that was used to process the documents.3 This ensures that the query and the documents exist in the same semantic vector space, making comparison possible.

- Similarity Search: The retriever then executes a similarity search within the vector database, measuring how close the query vector is to the chunk vectors in the index (for example, by cosine similarity or a distance metric) and identifying the top-K chunks that are semantically closest to the query.3 An exact search compares the query against every chunk vector; Approximate Nearest Neighbor (ANN) indexes instead examine only a small, well-chosen subset, trading a little accuracy for speed so that search stays efficient even over billions of vectors.7

- Ranking and Filtering: The retrieved chunks are ranked by their relevance score, and typically only a small number of the highest-ranked documents (e.g., the top 5 or 10) are passed to the next stage.21
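The three retriever steps can be illustrated with exact (brute-force) cosine-similarity search. This sketch is not how a vector database works internally: the three-dimensional vectors are made up and are assumed to come from the same embedding model, the `min_score` filter is an illustrative addition, and a production system would replace the exhaustive scoring with an ANN index.

```python
import heapq
import math

def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def top_k(query_vec: list[float], index: dict[str, list[float]], k: int = 5,
          min_score: float = 0.0) -> list[tuple[str, float]]:
    """Score every chunk against the query, drop weak matches, keep the k best."""
    scored = ((chunk_id, cosine_similarity(query_vec, vec)) for chunk_id, vec in index.items())
    return heapq.nlargest(k, (pair for pair in scored if pair[1] >= min_score),
                          key=lambda pair: pair[1])

# Hypothetical pre-computed chunk embeddings and query embedding.
index = {
    "chunk-a": [1.0, 0.0, 0.0],
    "chunk-b": [0.7, 0.7, 0.0],
    "chunk-c": [0.0, 0.0, 1.0],
}
query_vec = [1.0, 0.2, 0.0]
print(top_k(query_vec, index, k=2))  # chunk-a first, then chunk-b
```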

Phase 2: The Augmentation

In this phase, the retrieved information is prepared for the LLM.

- Contextual Prompt Engineering: The content of the top-ranked document chunks is synthesized with the user’s original query to create a new, “augmented” prompt.3 This technique, sometimes called “prompt stuffing,” provides the LLM with the necessary external context, instructing it to formulate an answer based on the provided facts.7
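A minimal sketch of the augmentation step follows. The prompt wording, the numbered-source format, the `{"source", "text"}` chunk dictionaries, and the character budget (a crude proxy for a token limit) are all assumptions made for illustration; numbering the chunks is one simple way to let the generator cite them later.

```python
def build_augmented_prompt(question: str, chunks: list[dict[str, str]],
                           max_chars: int = 4000) -> str:
    """'Prompt stuffing': place numbered retrieved chunks ahead of the question."""
    entries: list[str] = []
    used = 0
    for i, chunk in enumerate(chunks, start=1):
        entry = f"[{i}] ({chunk['source']}) {chunk['text']}"
        if used + len(entry) > max_chars:  # crude stand-in for a token budget
            break
        entries.append(entry)
        used += len(entry)
    context = "\n".join(entries)
    return (
        "Answer the question using only the context below. Cite sources as [n]. "
        "If the context does not contain the answer, say that you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )

# Hypothetical top-ranked chunks, already ordered by relevance.
retrieved = [
    {"source": "travel-policy.pdf", "text": "Hotels are capped at $200 per night."},
    {"source": "faq.md", "text": "Receipts are required for all claims."},
]
print(build_augmented_prompt("What is the hotel cap?", retrieved))
```

Because chunks are added in rank order, a tight budget drops the lowest-ranked context first.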

Phase 3: The Generator

This is the final stage where the LLM produces the answer.

- Response Generation: The augmented prompt is sent to the generator, which is a powerful LLM such as GPT-4 or Llama2.20 The LLM uses its advanced language capabilities to synthesize a coherent, well-formed, and contextually relevant response that integrates the information from the retrieved chunks with its own internal knowledge.2

The RAG pipeline functions as a series of compounding decisions, where choices made in the initial stages have a disproportionate and non-linear impact on the final output quality. A common failure point in RAG systems is poor retrieval quality, where the system fetches irrelevant or incomplete context.3 The root cause of this failure often lies not with the sophisticated retrieval algorithm but with the seemingly mundane data preparation steps. For instance, if a critical piece of information is inadvertently split across two separate chunks during the initial processing, no retrieval algorithm can recover that complete semantic unit. This creates a cascade of failure: poor chunking leads to fragmented embeddings, which prevents a successful semantic match, resulting in poor retrieval, which ultimately causes the LLM to receive inadequate context and generate a hallucinated or irrelevant answer. This demonstrates that the overall quality of a RAG system is dictated not by its most advanced component (the LLM) but by its “weakest link,” which is frequently the data ingestion and chunking stage. Therefore, a strategic investment in optimizing document analysis and chunking strategies often yields a far greater return on investment in final answer quality than simply upgrading the generator model.19

Post-Processing and Verification

In many production-grade RAG systems, an optional but highly valuable final step is post-processing.21 This can involve fact-checking the generated response against the source documents, summarizing long answers for brevity, or formatting the output for better readability. Crucially, this stage often includes adding citations or references that link the generated statements back to the specific source documents from which the information was retrieved. This feature is fundamental to building user trust, as it provides transparency and allows users to verify the information for themselves.7
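The citation part of post-processing can be sketched as a check over the generated text. This assumes the generator was instructed to cite numbered sources as `[n]`, in the same order the chunks appeared in the prompt; the function name and report format are hypothetical, and the check only verifies citation integrity, not whether each claim is actually supported by the cited text.

```python
import re

def attach_references(answer: str, sources: list[str]) -> tuple[str, list[str]]:
    """Validate [n] citations in a generated answer and append a reference list.

    Returns the answer with a "References" section appended, plus a list of
    problems: citations to sources that were never retrieved, and sentences
    with no citation at all.
    """
    problems: list[str] = []
    cited: set[int] = set()
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        if not sentence:
            continue
        ids = [int(n) for n in re.findall(r"\[(\d+)\]", sentence)]
        if not ids:
            problems.append(f"uncited: {sentence!r}")
        for n in ids:
            if 1 <= n <= len(sources):
                cited.add(n)
            else:
                problems.append(f"unknown source [{n}]")
    references = "\n".join(f"[{n}] {sources[n - 1]}" for n in sorted(cited))
    return f"{answer.strip()}\n\nReferences:\n{references}", problems

sources = ["travel-policy.pdf", "faq.md"]  # hypothetical chunk sources, in prompt order
answer = "Hotels are capped at $200 per night [1]. Receipts are required [3]. Have a nice trip."
report, problems = attach_references(answer, sources)
print(report)
print(problems)  # flags the invalid [3] and the uncited final sentence
```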

The Evolution of RAG Paradigms

The field of Retrieval-Augmented Generation has evolved rapidly, moving from simple, linear pipelines to highly sophisticated, modular, and adaptive architectures. This progression reflects a growing ambition to not only provide LLMs with knowledge but also to imbue them with more effective strategies for finding and using that knowledge. This evolution can be broadly categorized into three paradigms: Naive, Advanced, and Modular RAG.3

Naive RAG

Naive RAG represents the foundational architecture, often described as a “retrieve-then-read” or “index-retrieve-generate” process.3 This is the classic workflow detailed in the previous section, which gained widespread popularity with the advent of powerful generative models like ChatGPT.29 While effective, this straightforward approach is beset by several limitations that spurred the development of more advanced techniques:

- Retrieval Challenges: The simple retrieval mechanism often struggles with precision and recall. It can retrieve chunks that are only tangentially related to the query’s intent or, conversely, miss crucial pieces of information that use different terminology (the “vocabulary mismatch” problem).3

- Generation Difficulties: The quality of the final response is highly dependent on the quality of the retrieved context. If the context is noisy, irrelevant, or contradictory, the generator is prone to hallucination or producing irrelevant and biased outputs.3

- Augmentation Hurdles: There is often a challenge in seamlessly integrating the retrieved text snippets with the LLM’s own generative flow, which can result in responses that feel disjointed, repetitive, or stylistically inconsistent.3

Advanced RAG

The Advanced RAG paradigm introduces crucial optimization steps both before and after the core retrieval phase, aiming to enhance the quality and relevance of the context provided to the LLM.4 These enhancements primarily focus on two areas:

- Pre-retrieval Optimization: These strategies focus on refining the input to the retriever. A key technique is query transformation or query rewriting. Here, the initial user query is analyzed and modified to be more specific, to fix spelling errors, or to align better with the language and structure of the knowledge base, thereby improving the chances of a successful retrieval.4

- Post-retrieval Optimization: These strategies focus on refining the output of the retriever. The most significant technique is re-ranking. In this step, the initial list of top-K documents from the retriever is passed to a second, more powerful model (often a cross-encoder). This re-ranker performs a more fine-grained evaluation of the semantic relevance between the query and each document, re-ordering the list to push the most relevant documents to the top. This acts as a critical filter, reducing noise and ensuring the generator receives the highest-quality context possible.2
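As an illustration of pre-retrieval query rewriting, the sketch below applies two simple rules: it expands domain shorthand using a small glossary, then snaps likely misspellings to the knowledge base's vocabulary with `difflib.get_close_matches` from the Python standard library. Production systems often use an LLM for this step instead; the glossary entries, the vocabulary, and the 0.8 similarity cutoff are assumptions.

```python
import difflib
import re

# Hypothetical glossary mapping user shorthand to the knowledge base's terminology.
GLOSSARY = {"pto": "paid time off", "wfh": "remote work"}

def rewrite_query(query: str, kb_vocabulary: set[str], cutoff: float = 0.8) -> str:
    """Rule-based pre-retrieval rewrite: expand shorthand, then correct likely typos."""
    vocabulary = sorted(kb_vocabulary)  # sorted for deterministic tie-breaking
    rewritten = []
    for word in re.findall(r"[a-z0-9']+", query.lower()):
        if word in GLOSSARY:
            rewritten.append(GLOSSARY[word])
        elif word in kb_vocabulary:
            rewritten.append(word)
        else:
            match = difflib.get_close_matches(word, vocabulary, n=1, cutoff=cutoff)
            rewritten.append(match[0] if match else word)  # keep the word if nothing is close
    return " ".join(rewritten)

vocab = {"policy", "paid", "time", "off", "remote", "work", "reimbursement"}
print(rewrite_query("What is the PTO polcy?", vocab))  # what is the paid time off policy
```

The rewritten query now uses the same terms as the indexed documents, which directly targets the vocabulary-mismatch problem described for Naive RAG.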

Modular RAG

Modular RAG marks a significant architectural shift away from a rigid, linear pipeline towards a more flexible and powerful framework composed of interconnected, specialized modules.4 This approach allows for greater adaptability and the integration of more complex functionalities. A modular RAG system can include various interchangeable or additional components, such as:

- A search module that can employ multiple retrieval strategies (e.g., vector search, keyword search, graph-based search) and query different sources.4

- A memory module that retains the history of a conversation, enabling the system to handle multi-turn dialogues and follow-up questions effectively.32

- A reasoning module capable of performing multi-step retrieval. This mimics a human research process, where the findings from an initial retrieval are used to formulate a new, more specific query for a subsequent retrieval step, allowing the system to tackle complex questions that require synthesizing information from multiple sources.32

This modularity not only enhances the system’s capabilities but also allows for the seamless integration of complementary AI technologies, such as fine-tuning specific components or using reinforcement learning to optimize the retrieval strategy over time.4
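The modular idea can be sketched with a small structural interface: any object with a `search(query, k)` method can be plugged in as a search module, and a memory module supplies conversational context to follow-up questions. Everything here is hypothetical and deliberately simple: keyword overlap stands in for real vector or graph search, and naive concatenation of recent turns stands in for an LLM-based query rewriter.

```python
import re
from dataclasses import dataclass, field
from typing import Protocol

def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

class SearchModule(Protocol):
    """Any retrieval strategy (vector, keyword, graph, ...) that returns ranked texts."""
    def search(self, query: str, k: int) -> list[str]: ...

@dataclass
class KeywordSearch:
    """Toy search module: ranks documents by the number of words shared with the query."""
    docs: list[str]

    def search(self, query: str, k: int) -> list[str]:
        terms = words(query)
        ranked = sorted(self.docs, key=lambda d: len(terms & words(d)), reverse=True)
        return [d for d in ranked[:k] if terms & words(d)]

@dataclass
class ConversationMemory:
    """Memory module: keeps the last few user turns for resolving follow-ups."""
    max_turns: int = 3
    turns: list[str] = field(default_factory=list)

    def remember(self, question: str) -> None:
        self.turns = (self.turns + [question])[-self.max_turns:]

    def contextualize(self, question: str) -> str:
        return " ".join(self.turns + [question])

@dataclass
class ModularRAG:
    modules: list[SearchModule]
    memory: ConversationMemory = field(default_factory=ConversationMemory)

    def retrieve(self, question: str, k: int = 2) -> list[str]:
        query = self.memory.contextualize(question)
        results: list[str] = []
        for module in self.modules:  # modules can be added or swapped independently
            for doc in module.search(query, k):
                if doc not in results:
                    results.append(doc)
        self.memory.remember(question)
        return results

docs = [
    "The travel policy caps hotels at 200 dollars.",
    "Meal per diem is 50 dollars.",
    "The office closes at 6 pm.",
]
rag = ModularRAG(modules=[KeywordSearch(docs)])
first = rag.retrieve("What does the travel policy say about hotels?")
follow_up = rag.retrieve("What about the meal per diem?")
print(first, follow_up, sep="\n")
```

Adding, say, a vector-search module only requires another object with the same `search` method; the orchestration code does not change.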

The evolutionary path from Naive to Advanced and finally to Modular RAG is more than just an increase in architectural complexity; it mirrors a progression in cognitive capability. Naive RAG is analogous to a student answering a question by looking up a single fact in an encyclopedia: a simple, one-step recall process.3 Advanced RAG is like a more diligent student who first pauses to consider the best way to phrase their search query (query rewriting) and then, after gathering several sources, quickly skims them to identify the most promising one before reading in depth (re-ranking).4 The process is still linear but incorporates steps for quality control. Modular RAG represents a significant cognitive leap, akin to a researcher tackling a complex problem. They might perform an initial broad search, use those findings to conduct a more targeted follow-up search (multi-step retrieval), consult different types of sources (modular search), and remember what they have already learned (memory).32 This trajectory demonstrates a clear shift from a simple tool that fetches information to a more sophisticated system that strategizes about how to find, evaluate, and synthesize it, laying the conceptual foundation for the highly autonomous Agentic RAG systems.

Strategic Implementation: RAG vs. Fine-Tuning

When seeking to adapt a general-purpose LLM for a specific business need, organizations face a critical strategic choice between two primary customization techniques: Retrieval-Augmented Generation (RAG) and fine-tuning. While both aim to enhance model performance and deliver domain-specific responses, they operate through fundamentally different mechanisms and present distinct trade-offs in terms of cost, complexity, data privacy, and knowledge management.33 Understanding these differences is essential for making an informed architectural decision.

A Comparative Framework

The choice between RAG and fine-tuning can be understood through a simple analogy: RAG is like giving a generalist cook a new, specialized recipe book to use for a specific meal, while fine-tuning is like sending that cook to an intensive culinary course to become a specialist in a particular cuisine.33

- Core Mechanism: RAG augments an LLM’s knowledge externally at inference time. It provides relevant information as context within the prompt, but it does not alter the LLM’s underlying parameters.33 In contrast, fine-tuning directly modifies the LLM’s internal knowledge by continuing the training process on a curated, domain-specific dataset. This process adjusts the model’s internal weights, effectively teaching it a new skill or dialect.34

- Knowledge Incorporation: RAG is exceptionally well-suited for incorporating dynamic, volatile, or rapidly changing information. To update the system’s knowledge, one only needs to update the external documents in the knowledge base—a relatively simple and fast process.9 Fine-tuning is better for teaching the model implicit knowledge, such as a specific style, tone, or the nuanced vocabulary of a specialized field like medicine or law. This knowledge becomes embedded in the model but is static once training is complete.34

- Data Privacy and Security: RAG generally offers a more secure posture for handling sensitive data. Proprietary information can be kept in a secure, on-premises database and is only accessed at runtime for a specific query. The data is used as context but is not absorbed into the model’s parameters.1 Fine-tuning, however, requires exposing the model to this proprietary data during the training phase, which can pose a security or privacy risk depending on the data’s sensitivity and the deployment environment.1

- Cost, Time, and Resources: RAG has a lower barrier to entry, requiring primarily coding and data infrastructure skills, making the initial implementation less complex and costly.17 However, it introduces additional computational overhead and latency for every query processed at runtime.34 Fine-tuning is a resource-intensive endeavor, demanding significant upfront investment in compute infrastructure (GPU clusters), time, and specialized AI/ML expertise in areas like deep learning and NLP.33 Once a model is fine-tuned, however, its runtime performance is efficient and requires no additional overhead per query.34

- Hallucination and Verifiability: RAG is a powerful tool for mitigating hallucinations because it grounds the LLM’s responses in retrieved factual evidence. Crucially, it enables the system to cite its sources, making outputs transparent and verifiable by the end-user.7 Fine-tuning can reduce hallucinations on topics within its specialized domain but is still susceptible to making errors on unfamiliar queries and does not have an inherent mechanism for providing source citations.35

| Criterion | Retrieval-Augmented Generation (RAG) | Fine-Tuning |
| --- | --- | --- |
| Primary Goal | To provide the LLM with up-to-date, factual, or proprietary knowledge at the time of response generation. | To adapt the LLM’s core behavior, style, tone, or understanding of a specialized domain’s language. |
| Knowledge Mechanism | External and non-parametric. Knowledge is retrieved from an external database and supplied in the prompt at runtime. The model’s weights are not changed.34 | Internal and parametric. Knowledge is integrated into the model’s weights through continued training on a domain-specific dataset.35 |
| Data Freshness | Excellent for dynamic data. Knowledge can be updated in real-time by simply modifying the external data source.14 | Static. The model’s knowledge is fixed at the time of training. Incorporating new information requires retraining.35 |
| Cost Profile | Lower upfront cost and complexity. Higher runtime cost due to the added retrieval step for each query.34 | High upfront cost for data curation, compute resources (GPUs), and specialized skills. Efficient runtime with no extra overhead.34 |
| Data Privacy | High. Sensitive data can remain in a secure, isolated database and is not absorbed into the model.1 | Lower. Requires exposing the model to proprietary data during the training process, which may be a security concern.1 |
| Verifiability | High. Enables citation of sources, allowing users to verify the factual basis of the generated response.14 | Low. Does not inherently provide a mechanism to trace generated information back to a specific source document. |
| Key Weakness | Adds latency to each query. The quality of the response is highly dependent on the quality of the retrieval.28 | Can be prone to “catastrophic forgetting” of general knowledge. Requires significant technical expertise and resources to implement.33 |
| Required Skills | Data engineering, data architecture, and coding skills are primary.34 | Deep learning, NLP, model configuration, and MLOps expertise are required, in addition to data skills.34 |

Hybrid Architectures: The Best of Both Worlds

It is crucial to recognize that RAG and fine-tuning are not mutually exclusive; in fact, they can be powerfully complementary.4 A hybrid architecture represents a state-of-the-art approach to LLM customization. In this model, an LLM is first fine-tuned to master the specific vocabulary, tone, and implicit reasoning patterns of a domain. This specialized model is then deployed within a RAG framework, which provides it with real-time, factual information from an external knowledge base. This approach combines the “how” (the specialized style and understanding from fine-tuning) with the “what” (the up-to-date facts from RAG), resulting in responses that are not only factually accurate and current but also stylistically appropriate and contextually nuanced.34

The Frontier of RAG: Advanced Techniques and Architectures

As the adoption of RAG has grown, so has the research into overcoming its limitations. The frontier of RAG is characterized by a move away from simple, linear pipelines toward more dynamic, reflective, and intelligent systems. These advanced techniques aim to improve every stage of the RAG process, from how queries are understood to how information is retrieved, evaluated, and synthesized.

Enhancing the Retrieval and Ranking Phases

The quality of retrieval is a primary determinant of RAG’s success. Advanced techniques focus on ensuring the context provided to the generator is as precise and relevant as possible.

- Hybrid Search: This technique addresses the shortcomings of using a single retrieval method by combining the strengths of multiple approaches. It typically fuses traditional keyword-based search (sparse retrieval, e.g., BM25), which excels at matching specific terms and entities, with modern semantic vector search (dense retrieval), which excels at understanding conceptual meaning and user intent. This combination leads to more robust and comprehensive retrieval results that capture both lexical and semantic relevance.9

- Re-ranking Models: Re-ranking introduces a second, more meticulous evaluation stage after the initial retrieval. While the first-pass retriever is optimized for speed and recall (finding a broad set of potentially relevant documents), the re-ranker is optimized for precision. A more powerful but computationally intensive model, such as a BERT-based cross-encoder, takes the top candidates from the retriever and performs a deep, pairwise comparison with the query. It then re-orders these candidates, pushing the most semantically relevant documents to the top. This is a critical step for filtering out noise and maximizing the quality of the context sent to the generator, trading a small increase in latency for a significant boost in final accuracy.2
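Hybrid search and re-ranking can be combined in a short two-stage sketch. To fuse the sparse and dense result lists it uses Reciprocal Rank Fusion (RRF), one common fusion method among several, where each document scores the sum over lists of 1 / (k + rank) with the conventional constant k = 60. The re-ranking stage takes a pluggable pair scorer. The ranked lists, document IDs and texts, and `toy_pair_scorer` (a crude stand-in for a cross-encoder) are all hypothetical.

```python
from collections import defaultdict
from typing import Callable

def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse ranked lists of doc IDs: score(d) = sum over lists of 1 / (k + rank of d)."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.__getitem__, reverse=True)

def rerank(query: str, candidate_ids: list[str], texts: dict[str, str],
           score_pair: Callable[[str, str], float], top_n: int = 3) -> list[str]:
    """Second stage: score each (query, document) pair jointly and keep the top_n."""
    ranked = sorted(candidate_ids, key=lambda d: score_pair(query, texts[d]), reverse=True)
    return ranked[:top_n]

def toy_pair_scorer(query: str, doc: str) -> float:
    """Hypothetical stand-in for a cross-encoder: share of query bigrams found in the doc."""
    q = query.lower().split()
    bigrams = {" ".join(q[i:i + 2]) for i in range(len(q) - 1)}
    return sum(b in doc.lower() for b in bigrams) / max(len(bigrams), 1)

texts = {
    "d1": "Error E42 means the printer tray is empty.",
    "d2": "Printer manual: loading paper into the tray.",
    "d3": "How to fix error E42 on the printer: refill the tray.",
    "d4": "Frequently asked questions about warranties.",
}
sparse = ["d1", "d3", "d4"]  # e.g. keyword (BM25) results: strong on the exact code "E42"
dense = ["d2", "d3", "d1"]   # e.g. vector search results: strong on meaning
candidates = reciprocal_rank_fusion([sparse, dense])   # recall-oriented first stage
best = rerank("fix error e42", candidates, texts, toy_pair_scorer, top_n=2)
print(candidates, best)
```

RRF only uses ranks, so it sidesteps the problem that BM25 scores and cosine similarities live on different scales; the re-ranker then spends its extra compute on the small fused candidate set only.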

Iterative and Reflective Architectures

A major innovation in RAG is the introduction of self-reflection and iteration, allowing the system to assess and correct its own processes.

- Self-RAG (Self-Reflective RAG): This framework endows a single LLM with the ability to control its own retrieval and generation process through self-reflection. It uses special “reflection tokens” to make several key decisions on-demand: (1) whether retrieval is necessary at all for a given query, (2) assessing the relevance of any retrieved passages, and (3) critiquing its own generated output to check if it is factually supported by the provided evidence.29 This adaptive approach makes the model’s reasoning more transparent and allows its behavior to be tailored to specific task requirements.40

- Corrective RAG (CRAG): This technique is designed to improve RAG’s robustness, particularly when the initial retrieval yields poor results. CRAG introduces a lightweight “retrieval evaluator” that grades the quality of the retrieved documents against the query. If the documents are deemed irrelevant or incorrect, CRAG triggers a corrective action. This could involve discarding the faulty documents and initiating a web search to find more reliable information, or decomposing and refining documents that are correct but contain irrelevant noise.29 This prevents the generator from being misled by poor context.

- Adaptive RAG: This framework introduces a layer of intelligence that dynamically selects the most efficient retrieval strategy based on the query’s complexity. A classifier model first analyzes the user’s question and routes it down an appropriate path: simple queries may be answered directly by the LLM with no retrieval; moderately complex queries may trigger a standard, single-step retrieval; and highly complex queries may activate an iterative, multi-step retrieval process.45 This balanced approach conserves computational resources for simple tasks while dedicating more powerful methods to challenging ones.47
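The control flow of Corrective RAG and Adaptive RAG can be sketched as follows. Both functions are hypothetical skeletons: the retrieval evaluator, web search, refinement step, retriever, and complexity classifier are passed in as plain functions (toy versions are defined at the bottom), and the thresholds are illustrative rather than tuned. The three-way correct / incorrect / ambiguous split follows the general scheme proposed for CRAG, with the ambiguous case combining internal and external evidence.

```python
from enum import Enum
from typing import Callable

# --- Corrective RAG: grade the retrieved documents, then correct course if needed ---

def corrective_retrieve(
    query: str,
    docs: list[str],
    evaluate: Callable[[str, str], float],   # retrieval evaluator: relevance score in [0, 1]
    web_search: Callable[[str], list[str]],  # fallback source of external evidence
    refine: Callable[[str, str], str],       # removes irrelevant parts of a useful document
    upper: float = 0.7,                      # illustrative thresholds, not tuned values
    lower: float = 0.3,
) -> tuple[str, list[str]]:
    scores = [evaluate(query, d) for d in docs]
    best = max(scores, default=0.0)
    if best >= upper:  # at least one document is clearly relevant: keep and refine those
        return "correct", [refine(query, d) for d, s in zip(docs, scores) if s >= upper]
    if best < lower:   # nothing is relevant: discard the documents and search the web
        return "incorrect", web_search(query)
    # In between: keep the plausible documents and add external results as a hedge.
    kept = [refine(query, d) for d, s in zip(docs, scores) if s >= lower]
    return "ambiguous", kept + web_search(query)

# --- Adaptive RAG: route each query to a strategy matching its estimated complexity ---

class Route(Enum):
    NO_RETRIEVAL = "no_retrieval"
    SINGLE_STEP = "single_step"
    MULTI_STEP = "multi_step"

def toy_classifier(query: str) -> Route:
    """Hypothetical keyword heuristic standing in for a trained complexity classifier."""
    q = query.lower()
    if any(cue in q for cue in ("compare", "difference between", "how did")):
        return Route.MULTI_STEP
    if len(q.split()) <= 3:  # e.g. greetings or trivial requests
        return Route.NO_RETRIEVAL
    return Route.SINGLE_STEP

def adaptive_context(query: str, classify: Callable[[str], Route],
                     retrieve: Callable[[str], list[str]], max_hops: int = 3) -> list[str]:
    """Gather the context the generator should receive for this query."""
    route = classify(query)
    if route is Route.NO_RETRIEVAL:
        return []
    if route is Route.SINGLE_STEP:
        return retrieve(query)
    context: list[str] = []
    follow_up = query
    for _ in range(max_hops):  # multi-step: each hop's findings shape the next query
        new = [d for d in retrieve(follow_up) if d not in context]
        if not new:
            break
        context.extend(new)
        follow_up = f"{query} {new[0]}"  # naive follow-up query formation
    return context

# Toy stand-ins for the evaluator, web search, refiner, and retriever.
def relevance(q: str, d: str) -> float:
    return 1.0 if "visa" in d.lower() else 0.0

def search_web(q: str) -> list[str]:
    return [f"web result for: {q}"]

def keep(q: str, d: str) -> str:
    return d.strip()

KB = {"2023": "2023 policy: hotels capped at 180.", "2024": "2024 policy: hotels capped at 200."}

def toy_retrieve(q: str) -> list[str]:
    return [text for year, text in KB.items() if year in q]

print(corrective_retrieve("visa rules", ["Lunch menu."], relevance, search_web, keep))
print(adaptive_context("Compare the 2023 and 2024 hotel caps", toy_classifier, toy_retrieve))
```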

Structural and Agentic Innovations

The most advanced RAG architectures rethink the structure of the knowledge base and the nature of the system itself, moving towards autonomous agents.

- Long-Context RAG (e.g., Long RAG, RAPTOR): These techniques tackle the challenge of processing very long documents, where standard chunking can lose critical context. Long RAG is designed to work with larger retrieval units, such as entire document sections.42 RAPTOR (Recursive Abstractive Processing for Tree-Organized Retrieval) creates a hierarchical tree of summaries for a document, allowing retrieval to occur at multiple levels of abstraction, from fine-grained details to high-level concepts.48

- GraphRAG: This approach leverages structured knowledge graphs as the external data source. Instead of retrieving unstructured text chunks, GraphRAG retrieves nodes and their relationships (subgraphs). This structure is ideal for answering complex questions that require multi-hop reasoning—connecting disparate pieces of information through their explicit relationships—a task that is notoriously difficult with unstructured text alone.7

- Agentic RAG: This represents the current apex of RAG evolution, where the RAG pipeline is integrated as a tool to be used by autonomous AI agents. These agents can orchestrate complex, multi-step tasks by leveraging RAG within a broader reasoning framework. Key patterns of Agentic RAG include:52

- Planning: Decomposing a complex user request into a logical sequence of sub-tasks.

- Tool Use: Interacting with various external tools, including the RAG retriever, APIs, or code interpreters, to gather information and perform actions.

- Reflection: Evaluating the results of their actions and the quality of their generated outputs to self-correct and refine their approach.

- Multi-Agent Collaboration: Multiple specialized agents working together, each potentially equipped with its own RAG system, to solve a complex problem.

| Technique | Core Idea | Problem Solved | Key Limitation(s) |
|---|---|---|---|
| Self-RAG | The LLM learns to control its own retrieval and critique its own output using special “reflection tokens”.40 | Reduces unnecessary retrieval for simple queries and improves factual grounding by forcing self-assessment.42 | Requires specialized training of the LLM to generate and understand reflection tokens; adds complexity to the training pipeline.53 |
| Corrective RAG (CRAG) | A retrieval evaluator grades retrieved documents and triggers corrective actions (e.g., web search) if they are irrelevant.43 | Improves robustness when initial retrieval is poor, preventing the generator from being misled by bad context.43 | Adds latency due to the evaluation and potential web search steps; effectiveness depends on the quality of the evaluator model.44 |
| Adaptive RAG | A classifier dynamically routes queries to different processing paths (no retrieval, single-step, multi-step) based on complexity.46 | Balances performance and cost by applying computationally expensive methods only when necessary for complex queries.45 | The system’s performance is dependent on the accuracy of the initial query classifier; misclassification can lead to suboptimal processing.46 |
| Agentic RAG | Autonomous agents use RAG as one of many tools in a planned, multi-step reasoning process involving reflection and collaboration.52 | Handles highly complex, dynamic tasks that require more than just question-answering, such as workflow automation or research analysis.31 | Significantly increases system complexity, orchestration challenges, and potential for cascading errors between agent steps.52 |
| GraphRAG | Retrieves information from a structured knowledge graph instead of unstructured text, leveraging entity relationships.50 | Excels at multi-hop reasoning and answering questions about relationships between entities that are hard to infer from plain text.7 | Requires a high-quality, well-maintained knowledge graph, which can be expensive and complex to create and update.50 |
| Long RAG / RAPTOR | Processes documents in larger, more coherent chunks or creates hierarchical summary trees to preserve context.42 | Mitigates context fragmentation and information loss that occurs with small, fixed-size chunking of long documents.42 | Can increase the amount of context fed to the LLM, potentially hitting context window limits or introducing more noise if not managed well. |
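The Corrective RAG row can be made concrete with a short sketch of its decision step. It follows CRAG’s three-way logic: if at least one document scores above an upper threshold, the retrieval is treated as “correct”; if every document scores below a lower threshold, it is “incorrect” and replaced by web search; otherwise it is “ambiguous” and both sources are combined. The `score` evaluator and `web_search` tool are hypothetical callables, the thresholds are illustrative rather than values from the CRAG work, and simple filtering stands in for CRAG’s knowledge-refinement step.

```python
from typing import Callable, List, Tuple


def corrective_context(
    query: str,
    docs: List[str],
    score: Callable[[str, str], float],
    web_search: Callable[[str], List[str]],
    upper: float = 0.6,
    lower: float = 0.2,
) -> Tuple[str, List[str]]:
    """CRAG-style decision over retrieved documents.

    `score` stands in for a retrieval evaluator returning relevance in [0, 1];
    `web_search` stands in for an external search tool. Both are hypothetical.
    """
    scores = [score(query, d) for d in docs]
    if any(s >= upper for s in scores):
        # "Correct": keep only confidently relevant documents (a simplified
        # stand-in for CRAG's knowledge-refinement step).
        return "correct", [d for d, s in zip(docs, scores) if s >= upper]
    if all(s < lower for s in scores):
        # "Incorrect": discard the retrieval and fall back to web search.
        return "incorrect", web_search(query)
    # "Ambiguous": combine plausible retrieved documents with search results.
    kept = [d for d, s in zip(docs, scores) if s >= lower]
    return "ambiguous", kept + web_search(query)


# Hypothetical evaluator scores and search tool for demonstration only.
fake_scores = {"refund policy text": 0.9, "related FAQ": 0.4, "unrelated memo": 0.05}


def evaluator(query: str, doc: str) -> float:
    return fake_scores[doc]


def search(query: str) -> List[str]:
    return [f"web result for: {query}"]


print(corrective_context("refund window?", ["refund policy text", "unrelated memo"], evaluator, search))
print(corrective_context("refund window?", ["unrelated memo"], evaluator, search))
print(corrective_context("refund window?", ["related FAQ", "unrelated memo"], evaluator, search))
```

The extra `score` calls and the possible `web_search` round-trip are exactly where the latency cost listed in the table comes from, and the quality of `score` determines which branch fires.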

RAG in Practice: Applications and Industry Impact

The theoretical advancements in RAG have translated into tangible, high-impact applications across a wide array of industries. RAG is proving to be a “last-mile” technology, bridging the gap between the reasoning capabilities of LLMs and the vast repositories of proprietary, unstructured data that organizations have accumulated over decades. By making this dormant institutional knowledge usable, RAG can deliver a comparatively fast return on investment, since it builds on data that organizations already hold.

Enterprise Knowledge Management & Internal Tools

One of the most immediate and widespread applications of RAG is in revolutionizing internal knowledge management. Enterprises often possess vast, siloed repositories of information in the form of technical documentation, company policies, HR guidelines, and historical project data.5 RAG-powered chatbots and search engines can act as intelligent assistants, allowing employees to ask natural language questions and receive precise, context-aware answers drawn directly from these internal sources.32

- Example: Bell Canada has deployed a modular RAG system to enhance its internal knowledge management processes, ensuring employees have access to the most up-to-date company information.55

- Example: LinkedIn developed a novel system combining RAG with a knowledge graph to power its internal customer service helpdesk, successfully reducing the median time to resolve issues by over 28%.55

- Example: Project management tool Asana leverages RAG to provide users with intelligent insights based on their project data.56

Customer Service and Support

RAG is transforming customer service by enabling the creation of highly capable automated support agents. These virtual assistants can provide accurate, personalized, and up-to-the-minute responses by retrieving information from product manuals, troubleshooting guides, FAQs, and customer interaction histories.1 This not only improves customer satisfaction by providing instant answers but also frees up human agents to handle more complex issues.

- Example: DoorDash built a sophisticated RAG-based chatbot for its delivery contractors (“Dashers”). The system includes a “guardrail” component to monitor and ensure the accuracy and policy-compliance of every generated response.55

Specialized Professional Domains

In fields where accuracy and access to specific, dense information are paramount, RAG serves as a powerful co-pilot for professionals.

- Legal: RAG systems are used to accelerate legal research by rapidly sifting through immense volumes of case law, statutes, and legal precedents to find relevant information for drafting documents or analyzing cases.10 LexisNexis is one company applying RAG for advanced legal analysis.56

- Finance: Financial analysts use RAG to synthesize real-time market data, breaking news, and company reports to generate timely insights, forecasts, and investment recommendations.56 The Bloomberg Terminal is a prominent example of a financial tool that uses RAG to deliver market insights.56

- Healthcare: RAG assists clinicians in making more informed decisions by retrieving information from the latest medical research, patient health records, and established clinical guidelines to suggest diagnoses or formulate personalized treatment plans.6 IBM Watson Health utilizes RAG for this purpose.56

Content and Code Generation

RAG enhances both creative and technical generation tasks by grounding them in factual, relevant data. This applies to marketing content creation, SEO optimization, drafting tailored emails, and summarizing meetings.25

- Example: Content creation platform Jasper uses RAG to ensure its generated articles are accurate and contextually aware.56

- Example: Grammarly employs RAG to analyze the context of an email exchange and suggest appropriate adjustments to tone and style.56

- Example: In software development, RAG-powered tools assist programmers by retrieving code snippets and usage examples from the most recent versions of libraries and APIs, improving developer productivity and reducing errors.56

Challenges, Risks, and Mitigation Strategies

Despite its transformative potential, implementing a robust and reliable RAG system is a complex engineering endeavor fraught with challenges. A critical understanding of these potential failure points, security risks, and the nuances of evaluation is essential for successful deployment.

Retrieval Quality and Context Limitations

The core of RAG’s effectiveness lies in its retriever, making retrieval quality the system’s most critical vulnerability. The principle of “garbage in, garbage out” applies with particular force; if the retriever provides poor context, the generator will produce a poor response.

- The “Needle in a Haystack” Problem: This encompasses several related failure modes.28 The system might fail due to missing content, where the answer is not present in the knowledge base, yet the LLM hallucinates a response instead of stating its ignorance.58 It can also fail due to low precision or recall, where the retriever fetches irrelevant or incomplete documents, or due to suboptimal ranking, where the correct document is found but not ranked highly enough to be included in the final context.3

- Context Length Limitations: LLMs operate with a fixed-size context window. If the retrieval process returns too many documents, or if the relevant documents are exceedingly long, critical information can be truncated and lost during the augmentation phase. This can starve the generator of the very details it needs to form a complete and accurate answer.28
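How suboptimal ranking and a fixed context window combine to lose information can be shown with a minimal sketch of context packing. It assumes chunks arrive already ranked and approximates token counts by whitespace-separated words; a real system would count tokens with the generator model’s own tokenizer. The function name `pack_context` and the sample chunks are hypothetical.

```python
from typing import List, Tuple


def pack_context(ranked_chunks: List[str], budget_tokens: int) -> Tuple[List[str], List[str]]:
    """Fill a fixed context budget with chunks in rank order.

    Each chunk that still fits is kept; any chunk that would overflow the budget
    is dropped. Tokens are approximated by whitespace-separated words here.
    """
    kept, dropped, used = [], [], 0
    for text in ranked_chunks:
        cost = len(text.split())
        if used + cost <= budget_tokens:
            kept.append(text)
            used += cost
        else:
            dropped.append(text)
    return kept, dropped


ranked = [
    "The warranty covers parts for two years",                                # 7 words
    "Shipping is free on orders over fifty dollars",                         # 8 words
    "Refunds for the premium plan are processed within five business days",  # 11 words
]
kept, dropped = pack_context(ranked, budget_tokens=16)
print(dropped)
```

If the user asked about refunds, the one chunk that answers the question was ranked third and is the one that gets dropped, so the generator never sees it even though retrieval technically found it.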

System Performance and Complexity

Introducing a retrieval loop necessarily adds layers of complexity and potential performance bottlenecks.

- Latency: Each query in a RAG system requires at least one round-trip to a database, followed by the LLM’s generation time. Advanced techniques like re-ranking or multi-step retrieval add further steps, increasing the overall response time (latency). This can be a significant issue for real-time, interactive applications.13

- Computational Cost and Complexity: Building, deploying, and maintaining the full RAG stack—including data ingestion pipelines, vector databases, and continuous update processes—is a non-trivial engineering task that can be computationally expensive and resource-intensive, especially as the knowledge base scales.13

Security and Trustworthiness

By connecting an LLM to an external data source, RAG introduces a new attack surface and new considerations for data governance.

- Adversarial Attacks and Data Poisoning: The external knowledge base can be targeted by malicious actors. Research has demonstrated attacks like POISONCRAFT, where an attacker injects fraudulent information into the data source. This can “poison” the system, causing the RAG model to retrieve and cite misleading information or fraudulent websites, thereby compromising its integrity.61

- Data Reliability and Bias: The RAG system is fundamentally dependent on the quality of its knowledge source. If the source data is unreliable, biased, or outdated, the generated outputs will inherit these flaws.59 A significant challenge is also how to handle contradictory information when documents retrieved from multiple sources disagree.58

- Privacy and Security: When the knowledge base contains sensitive or personally identifiable information (PII), implementing robust security measures is paramount. This includes strict access controls, data anonymization techniques, and encryption to prevent unauthorized data exposure.59
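One way to apply access controls and anonymization in a RAG pipeline is to filter and redact retrieved chunks before they ever reach the prompt. The sketch below assumes each chunk carries hypothetical ACL metadata (`allowed_groups`) attached at ingestion time and uses a deliberately naive e-mail regex as a stand-in for a real PII-detection step; production systems typically enforce permissions inside the retrieval layer and use dedicated PII tooling.

```python
import re
from dataclasses import dataclass
from typing import FrozenSet, List, Set


@dataclass(frozen=True)
class Chunk:
    text: str
    allowed_groups: FrozenSet[str]  # hypothetical ACL metadata attached at ingestion time


# Naive e-mail pattern: local part, "@", then one or more dot-separated labels.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def authorized(chunks: List[Chunk], user_groups: Set[str]) -> List[Chunk]:
    """Drop chunks the requesting user may not see, before ranking or prompting."""
    return [c for c in chunks if c.allowed_groups & user_groups]


def redact(text: str) -> str:
    """Naive anonymization: mask e-mail addresses before text reaches the LLM."""
    return EMAIL.sub("[EMAIL]", text)


chunks = [
    Chunk("Salary bands are reviewed each March. Contact hr.lead@example.com.", frozenset({"hr"})),
    Chunk("The VPN client is available from the IT portal.", frozenset({"all-staff"})),
]
visible = authorized(chunks, {"all-staff", "engineering"})
context = [redact(c.text) for c in visible]
print(context)
```

Filtering before augmentation matters because anything placed in the prompt can surface in the generated answer, regardless of who asked.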

Evaluation and Monitoring

Evaluating the performance of a RAG system is notoriously difficult due to its hybrid nature, the interplay between its components, and the dynamic state of its knowledge base.2 A comprehensive evaluation framework is necessary to measure and improve system quality, yet the field currently lacks a unified, standard paradigm.2 Effective evaluation requires assessing the retrieval and generation components both independently and holistically.

| Component | Metric | Definition | Question it Answers |
|---|---|---|---|
| Retrieval | Context Precision | The proportion of retrieved documents that are relevant to the query. | “Are the retrieved documents actually useful for answering the question?”2 |
| | Context Recall | The proportion of all relevant documents in the knowledge base that were successfully retrieved. | “Did the retriever find all the necessary information to answer the question completely?”2 |
| Generation | Faithfulness | The degree to which the generated answer is factually consistent with the information presented in the retrieved context. | “Is the model making things up, or is it sticking to the provided facts?”2 |
| | Answer Relevancy | The degree to which the generated answer directly addresses the user’s original query and intent. | “Did the model actually answer the user’s question?”2 |
| | Answer Correctness | The factual accuracy of the generated answer when compared against a ground truth or sample response. | “Is the information in the final answer correct?”2 |
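The two retrieval metrics in the table reduce to simple set arithmetic once relevance labels exist. The sketch below implements the unranked definitions given in the table, assuming hypothetical document IDs and ground-truth labels for a single query; some evaluation frameworks instead use rank-weighted or LLM-judged variants of these metrics, and the generation metrics (faithfulness, relevancy, correctness) usually need an LLM or human judge, so they are not sketched here.

```python
from typing import List, Set


def context_precision(retrieved: List[str], relevant: Set[str]) -> float:
    """Share of retrieved documents that are relevant (the simple, unranked form)."""
    if not retrieved:
        return 0.0
    return sum(1 for doc_id in retrieved if doc_id in relevant) / len(retrieved)


def context_recall(retrieved: List[str], relevant: Set[str]) -> float:
    """Share of all relevant documents that the retriever returned."""
    if not relevant:
        raise ValueError("context recall is undefined without labeled relevant documents")
    return len(set(retrieved) & relevant) / len(relevant)


retrieved = ["d1", "d4", "d7", "d9"]  # retriever output for one query, in rank order
relevant = {"d1", "d2", "d7"}         # hypothetical ground-truth labels
print(context_precision(retrieved, relevant), context_recall(retrieved, relevant))
```

Here half of what was retrieved is useful (precision 0.5), while one of the three relevant documents, `d2`, was missed entirely (recall of about 0.67); the two numbers point to different fixes, namely better ranking or filtering versus broader retrieval.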

Conclusion and Future Trajectory

Synthesis of Findings

Retrieval-Augmented Generation has substantially changed how Large Language Models are applied in practice. It mitigates two of their most critical weaknesses, static knowledge and a tendency to hallucinate, by grounding them in external, verifiable facts. RAG turns LLMs from purely generative text engines into reasoning systems better suited to enterprise use, capable of delivering more accurate, timely, and trustworthy responses. Its core value proposition lies in its ability to enhance factual accuracy, provide auditable verifiability through citations, ensure informational currency, and enable robust data governance. By reducing the primary risks associated with deploying LLMs in high-stakes environments, RAG has become a core component of the modern AI technology stack.

Future Research Directions

The evolution of RAG is far from over. The future trajectory of the technology points toward greater capability, robustness, and integration. Key areas of ongoing research and development include:

- Modality Extension: The principles of RAG are being extended beyond text to encompass multimodal data. Future systems will be able to retrieve and synthesize information from a combination of text, images, audio, and video, enabling a more holistic understanding of complex queries.4

- Enhanced Reasoning: There is a strong push to develop more sophisticated multi-hop and multi-step reasoning capabilities. This will allow RAG systems to tackle increasingly complex questions that require synthesizing evidence across numerous documents and logical steps, moving closer to human-like research and analysis.4

- Improving Robustness and Trustworthiness: As RAG systems become more critical, research into fortifying them against adversarial attacks like data poisoning, mitigating inherent biases from data sources, and developing more comprehensive and standardized evaluation frameworks will be crucial for ensuring their reliability and safety.4

- The RAG Technical Stack and Ecosystem: The maturation of the field is leading to the development of a robust ecosystem of tools, platforms, and services. Frameworks like LangChain and LlamaIndex, along with “RAG-as-a-Service” offerings from major cloud providers, are simplifying the development process and accelerating the adoption of RAG across industries.4

Ultimately, the future of RAG appears to be a convergence with the broader field of Agentic AI. The clear evolutionary trend from Naive to Advanced and Modular RAG demonstrates a consistent move towards greater autonomy and flexibility.3 Advanced techniques like Self-RAG and Adaptive RAG introduce decision-making capabilities within the RAG pipeline itself: the system learns to ask, “Should I retrieve information now?” or “Is this retrieved document good enough?”.40 Agentic RAG takes this a logical step further by externalizing this decision-making process. In an agentic architecture, RAG ceases to be the entire application; instead, it becomes a specialized “tool” that an autonomous agent can choose to use, ignore, or configure on the fly as part of a larger, more complex plan.31 This suggests a paradigm shift in which the focus of innovation will move from optimizing the internal workings of the RAG pipeline to optimizing an agent’s ability to reason about when and how to deploy that pipeline to achieve a goal. The trajectory is toward the commoditization and abstraction of RAG, positioning it as a fundamental, callable service in the toolkit of next-generation intelligent agents.

Works cited

- 5 key features and benefits of retrieval augmented generation (RAG) | The Microsoft Cloud Blog, accessed on June 22, 2025, https://www.microsoft.com/en-us/microsoft-cloud/blog/2025/02/13/5-key-features-and-benefits-of-retrieval-augmented-generation-rag/

- Retrieval Augmented Generation Evaluation in the Era of Large Language Models: A Comprehensive Survey – arXiv, accessed on June 22, 2025, https://arxiv.org/html/2504.14891v1

- Retrieval-Augmented Generation for Large Language … – arXiv, accessed on June 22, 2025, https://arxiv.org/pdf/2312.10997

- Retrieval-Augmented Generation for Large Language Models: A Survey – arXiv, accessed on June 22, 2025, https://arxiv.org/html/2312.10997v2

- RAG for LLMs: Smarter AI with retrieval-augmented generation – Glean, accessed on June 22, 2025, https://www.glean.com/blog/rag-for-llms

- The Science Behind RAG: How It Reduces AI Hallucinations – Zero Gravity Marketing, accessed on June 22, 2025, https://zerogravitymarketing.com/blog/the-science-behind-rag/

- Retrieval-augmented generation – Wikipedia, accessed on June 22, 2025, https://en.wikipedia.org/wiki/Retrieval-augmented_generation

- Retrieval-Augmented Generation (RAG) – Pinecone, accessed on June 22, 2025, https://www.pinecone.io/learn/retrieval-augmented-generation/

- What is Retrieval-Augmented Generation (RAG)? – Google Cloud, accessed on June 22, 2025, https://cloud.google.com/use-cases/retrieval-augmented-generation

- RAG Cheatsheet – Eliminating Hallucinations in LLMs – The Cloud Girl, accessed on June 22, 2025, https://www.thecloudgirl.dev/blog/rag-eliminating-hallucinations-in-llms

- What is RAG (Retrieval Augmented Generation)? – IBM, accessed on June 22, 2025, https://www.ibm.com/think/topics/retrieval-augmented-generation

- Understanding RAG: Retrieval Augmented Generation Essentials for AI Projects – Apideck, accessed on June 22, 2025, https://www.apideck.com/blog/understanding-rag-retrieval-augmented-generation-essentials-for-ai-projects

- What Is RAG? Use Cases, Limitations, and Challenges – Bright Data, accessed on June 22, 2025, https://brightdata.com/blog/web-data/rag-explained

- How Retrieval-Augmented Generation Drives Enterprise AI Success, accessed on June 22, 2025, https://www.coveo.com/blog/retrieval-augmented-generation-benefits/

- 5 benefits of retrieval-augmented generation (RAG) – Merge.dev, accessed on June 22, 2025, https://www.merge.dev/blog/rag-benefits

- cloud.google.com, accessed on June 22, 2025, https://cloud.google.com/use-cases/retrieval-augmented-generation#:~:text=RAG-,What%20is%20Retrieval%2DAugmented%20Generation%20(RAG)%3F,large%20language%20models%20(LLMs).

- What is RAG? – Retrieval-Augmented Generation AI Explained – AWS, accessed on June 22, 2025, https://aws.amazon.com/what-is/retrieval-augmented-generation/

- How to Create a RAG System: A Complete Guide to Retrieval-Augmented Generation, accessed on June 22, 2025, https://www.mindee.com/blog/build-rag-system-guide

- Design and Develop a RAG Solution – Azure Architecture Center | Microsoft Learn, accessed on June 22, 2025, https://learn.microsoft.com/en-us/azure/architecture/ai-ml/guide/rag/rag-solution-design-and-evaluation-guide

- What is RAG: Understanding Retrieval-Augmented Generation …, accessed on June 22, 2025, https://qdrant.tech/articles/what-is-rag-in-ai/

- Retrieval Augmented Generation (RAG): A Complete Guide – WEKA, accessed on June 22, 2025, https://www.weka.io/learn/guide/ai-ml/retrieval-augmented-generation/

- Advanced RAG Techniques – Cazton, accessed on June 22, 2025, https://www.cazton.com/blogs/technical/advanced-rag-techniques

- Introduction to Retrieval Augmented Generation (RAG) – Redis, accessed on June 22, 2025, https://redis.io/glossary/retrieval-augmented-generation/

- [2505.08445] Optimizing Retrieval-Augmented Generation: Analysis of Hyperparameter Impact on Performance and Efficiency – arXiv, accessed on June 22, 2025, https://arxiv.org/abs/2505.08445

- Understanding RAG: 6 Steps of Retrieval Augmented Generation (RAG) – Acorn Labs, accessed on June 22, 2025, https://www.acorn.io/resources/learning-center/retrieval-augmented-generation/

- Active Retrieval-Augmented Generation – For Quicker, Better Responses – K2view, accessed on June 22, 2025, https://www.k2view.com/blog/active-retrieval-augmented-generation/

- Introduction to Retrieval Augmented Generation (RAG) – Weaviate, accessed on June 22, 2025, https://weaviate.io/blog/introduction-to-rag

- RAG (Retrieval-Augmented Generation): How It Works, Its Limitations, and Strategies for Accurate Results – Cloudkitect, accessed on June 22, 2025, https://cloudkitect.com/how-rag-works-limitations-and-strategies-for-accuracy/

- URAG: Implementing a Unified Hybrid RAG for Precise Answers in University Admission Chatbots – A Case Study at HCMUT – arXiv, accessed on June 22, 2025, https://arxiv.org/html/2501.16276v1

- Toolshed: Scale Tool-Equipped Agents with Advanced RAG-Tool Fusion and Tool Knowledge Bases – arXiv, accessed on June 22, 2025, https://arxiv.org/pdf/2410.14594

- Agentic Retrieval-Augmented Generation: A Survey on Agentic RAG – arXiv, accessed on June 22, 2025, https://arxiv.org/html/2501.09136v1

- RAG Architecture Explained: A Comprehensive Guide [2025] | Generative AI Collaboration Platform, accessed on June 22, 2025, https://orq.ai/blog/rag-architecture

- RAG vs. Fine-tuning – IBM, accessed on June 22, 2025, https://www.ibm.com/think/topics/rag-vs-fine-tuning

- RAG vs. Fine-Tuning: How to Choose – Oracle, accessed on June 22, 2025, https://www.oracle.com/artificial-intelligence/generative-ai/retrieval-augmented-generation-rag/rag-fine-tuning/

- Retrieval-Augmented Generation vs Fine-Tuning: What’s Right for You? – K2view, accessed on June 22, 2025, https://www.k2view.com/blog/retrieval-augmented-generation-vs-fine-tuning/

- RAG vs. fine-tuning: Choosing the right method for your LLM | SuperAnnotate, accessed on June 22, 2025, https://www.superannotate.com/blog/rag-vs-fine-tuning

- Fine-Tuning vs RAG: Key Differences Explained (2025 Guide) – Orq.ai, accessed on June 22, 2025, https://orq.ai/blog/finetuning-vs-rag

- Advanced RAG Techniques | DataCamp, accessed on June 22, 2025, https://www.datacamp.com/blog/rag-advanced

- Re-ranking in Retrieval Augmented Generation: How to Use Re-rankers in RAG – Chitika, accessed on June 22, 2025, https://www.chitika.com/re-ranking-in-retrieval-augmented-generation-how-to-use-re-rankers-in-rag/

- Self-Rag: Self-reflective Retrieval augmented Generation – arXiv, accessed on June 22, 2025, https://arxiv.org/html/2310.11511

- Self-RAG: Learning to Retrieve, Generate and Critique through Self-Reflection, accessed on June 22, 2025, https://selfrag.github.io/

- The 2025 Guide to Retrieval-Augmented Generation (RAG) – Eden AI, accessed on June 22, 2025, https://www.edenai.co/post/the-2025-guide-to-retrieval-augmented-generation-rag

- Corrective RAG – Learn Prompting, accessed on June 22, 2025, https://learnprompting.org/docs/retrieval_augmented_generation/corrective-rag

- Implementing Corrective RAG in the Easiest Way – LanceDB Blog, accessed on June 22, 2025, https://blog.lancedb.com/implementing-corrective-rag-in-the-easiest-way-2/

- Guide to Adaptive RAG Systems with LangGraph – Analytics Vidhya, accessed on June 22, 2025, https://www.analyticsvidhya.com/blog/2025/03/adaptive-rag-systems-with-langgraph/

- [2403.14403] Adaptive-RAG: Learning to Adapt Retrieval-Augmented Large Language Models through Question Complexity – arXiv, accessed on June 22, 2025, https://arxiv.org/abs/2403.14403

- 8 Retrieval Augmented Generation (RAG) Architectures You Should Know in 2025, accessed on June 22, 2025, https://humanloop.com/blog/rag-architectures

- Adaptive-RAG: Enhancing Large Language Models by Question-Answering Systems with Dynamic Strategy Selection for Query Complexity : r/machinelearningnews – Reddit, accessed on June 22, 2025, https://www.reddit.com/r/machinelearningnews/comments/1bs2i80/adaptiverag_enhancing_large_language_models_by/

- Retrieval-Augmented Generation (RAG): Deep Dive into 25 Different Types of RAG, accessed on June 22, 2025, https://www.marktechpost.com/2024/11/25/retrieval-augmented-generation-rag-deep-dive-into-25-different-types-of-rag/

- Advanced RAG techniques – Literal.ai, accessed on June 22, 2025, https://www.literalai.com/blog/advanced-rag-techniques

- A Comprehensive Guide to RAG Implementations – Groove Innovations, accessed on June 22, 2025, https://www.grooveinnovations.ai/post/a-comprehensive-guide-to-rag-implementations

- asinghcsu/AgenticRAG-Survey: Agentic-RAG explores … – GitHub, accessed on June 22, 2025, https://github.com/asinghcsu/AgenticRAG-Survey

- [2310.11511] Self-Rag: Self-reflective Retrieval augmented Generation – ar5iv – arXiv, accessed on June 22, 2025, https://ar5iv.labs.arxiv.org/html/2310.11511

- 10 Real-World Examples of Retrieval Augmented Generation – Signity Solutions, accessed on June 22, 2025, https://www.signitysolutions.com/blog/real-world-examples-of-retrieval-augmented-generation

- 10 RAG examples and use cases from real companies – Evidently AI, accessed on June 22, 2025, https://www.evidentlyai.com/blog/rag-examples

- Top 14 RAG Use Cases You Need to Know in … – Moon Technolabs, accessed on June 22, 2025, https://www.moontechnolabs.com/blog/rag-use-cases/

- 7 Practical Applications of RAG Models and Their Impact on Society – Hyperight, accessed on June 22, 2025, https://hyperight.com/7-practical-applications-of-rag-models-and-their-impact-on-society/

- Top Problems with RAG systems and ways to mitigate them – AIMon Labs, accessed on June 22, 2025, https://www.aimon.ai/posts/top_problems_with_rag_systems_and_ways_to_mitigate_them

- 5 challenges of using retrieval-augmented generation (RAG), accessed on June 22, 2025, https://www.merge.dev/blog/rag-challenges

- 12 RAG Pain Points and their Solutions – Analytics Vidhya, accessed on June 22, 2025, https://www.analyticsvidhya.com/blog/2024/06/rag-pain-points-and-their-solutions/

- [2505.06579] POISONCRAFT: Practical Poisoning of Retrieval-Augmented Generation for Large Language Models – arXiv, accessed on June 22, 2025, https://arxiv.org/abs/2505.06579

- [2502.06872] Towards Trustworthy Retrieval Augmented Generation for Large Language Models: A Survey – arXiv, accessed on June 22, 2025, https://arxiv.org/abs/2502.06872

- arXiv:2408.11381v2 [cs.CL] 9 Sep 2024, accessed on June 22, 2025, https://arxiv.org/pdf/2408.11381?

- Advanced RAG Techniques: Boost Accuracy & Efficiency – Chitika, accessed on June 22, 2025, https://www.chitika.com/advanced-rag-techniques-guide/

- A Study on the Implementation Method of an Agent-Based Advanced RAG System Using Graph – arXiv, accessed on June 22, 2025, https://arxiv.org/pdf/2407.19994?

- Retrieval Augmented Generation – IBM, accessed on June 22, 2025, https://www.ibm.com/architectures/patterns/genai-rag

- Retrieval Augmented Generation (RAG) for LLMs – Prompt Engineering Guide, accessed on June 22, 2025, https://www.promptingguide.ai/research/rag

- RAG 101: Demystifying Retrieval-Augmented Generation Pipelines | NVIDIA Technical Blog, accessed on June 22, 2025, https://developer.nvidia.com/blog/rag-101-demystifying-retrieval-augmented-generation-pipelines/

- RAG Pipeline Diagram: How to Augment LLMs With Your Data – Multimodal, accessed on June 22, 2025, https://www.multimodal.dev/post/rag-pipeline-diagram

- RAG and generative AI – Azure AI Search | Microsoft Learn, accessed on June 22, 2025, https://learn.microsoft.com/en-us/azure/search/retrieval-augmented-generation-overview

What is RAG (retrieval augmented generation)?

Retrieval augmented generation (RAG) is an architecture for optimizing the performance of an artificial intelligence (AI) model by connecting it with external knowledge bases. RAG helps large language models (LLMs) deliver more relevant responses at a higher quality.

Generative AI (gen AI) models are trained on large datasets and refer to this information to generate outputs. However, training datasets are finite and limited to the information the AI developer can access—public domain works, internet articles, social media content and other publicly accessible data.

RAG allows generative AI models to access additional external knowledge bases, such as internal organizational data, scholarly journals and specialized datasets. By integrating relevant information into the generation process, chatbots and other natural language processing (NLP) tools can create more accurate domain-specific content without needing further training.


[Figure: Annotated diagram of a typical RAG (Retrieval‑Augmented Generation) architecture]

The architecture centers on RAG’s core process:

(1) Index → (2) Retrieve → (3) Augment → (4) Generate

This pipeline enables high-fidelity, up-to-date responses without the need to retrain the LLM; to refresh its knowledge, you simply update the index. This is why RAG is often preferred for knowledge-intensive and real-time applications such as legal, medical, and enterprise Q&A.

- Step 1: Knowledge Ingestion

Documents (e.g., PDFs, web pages, databases) are processed offline. They are split into smaller chunks, embedded with an embedding model, and stored in a vector index or database (e.g., FAISS, Pinecone).

- Step 2: Query Embedding and Retrieval

At runtime, the user’s query is converted into an embedding with the same embedding model. This query embedding is used to search the vector index (e.g., FAISS) for the top‑K most relevant document chunks.

- Step 3: Augmented Prompt Construction

The retrieved chunks are combined with the user prompt to create a contextualized input for the LLM. The template might say: “Using the following context, answer the question…”

- Step 4: Generative Model

The augmented prompt is fed into a generative model (LLM), which processes both the user query and the retrieved content to produce a grounded, fluent answer. Optionally, it can cite its sources.
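The four steps can be traced end to end in a minimal, dependency-free sketch. It is not a production design: a bag-of-words term-count vector stands in for a real embedding model, an in-memory list stands in for the vector database, chunks are a tiny eight words so the example stays readable, and Step 4 is omitted because it requires an actual LLM call. All function names and sample documents are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple

Entry = Tuple[str, str, Counter]  # (source_id, chunk_text, vector)


def chunk(text: str, size: int = 8) -> List[str]:
    """Split a document into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(docs: Dict[str, str]) -> List[Entry]:
    """Step 1 (Index): chunk and embed every document into an in-memory index."""
    return [(doc_id, c, embed(c)) for doc_id, text in docs.items() for c in chunk(text)]


def retrieve(index: List[Entry], query: str, k: int = 2) -> List[Tuple[str, str]]:
    """Step 2 (Retrieve): embed the query with the same model, rank chunks by cosine similarity."""
    q = embed(query)
    ranked = sorted(index, key=lambda entry: cosine(q, entry[2]), reverse=True)
    return [(doc_id, c) for doc_id, c, _ in ranked[:k]]


def build_prompt(query: str, hits: List[Tuple[str, str]]) -> str:
    """Step 3 (Augment): prepend source-tagged context so the answer can cite it."""
    context = "\n".join(f"[{doc_id}] {c}" for doc_id, c in hits)
    return f"Using the following context, answer the question and cite sources.\n{context}\n\nQuestion: {query}"


docs = {
    "hr-policy": "Employees accrue 20 vacation days per year and may carry over 5 days.",
    "it-faq": "Reset your password from the self-service portal or contact the help desk.",
}
index = build_index(docs)
query = "How many vacation days do employees get?"
prompt = build_prompt(query, retrieve(index, query, k=1))
print(prompt)  # Step 4 (Generate) would send this prompt to an LLM; omitted here.
```

Updating the system’s knowledge only requires re-running `build_index` on new or changed documents; nothing about the generator changes, which is the property the process description above relies on. The source tags in the prompt are what allow the model to cite where each fact came from.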

What are the benefits of RAG?

RAG empowers organizations to avoid high retraining costs when adapting generative AI models to domain-specific use cases. Enterprises can use RAG to fill gaps in a machine learning model’s knowledge base so it can provide better answers.

The primary benefits of RAG include:

- Cost-efficient AI implementation and AI scaling

- Access to current and domain-specific data

- Lower risk of AI hallucinations

- Increased user trust

- Expanded use cases

- Enhanced developer control and model maintenance

- Greater data security

Cost-efficient AI implementation and AI scaling

When implementing AI, most organizations first select a foundation model: a deep-learning model that serves as the basis for developing more specialized versions. Foundation models typically have generalized knowledge bases populated with publicly available training data, such as internet content available at the time of training.

Retraining a foundation model, or fine-tuning it by training it further on a smaller, domain-specific dataset, is computationally expensive and resource-intensive, because the model must update some or all of its parameters to fit the new specialized data.

With RAG, enterprises can use internal, authoritative data sources to achieve comparable improvements on knowledge-intensive tasks without retraining. Enterprises can scale their AI applications as needed while limiting increases in cost and resource requirements.

Access to current and domain-specific data

Generative AI models have a knowledge cutoff, the point at which their training data was last updated. The further a model moves past its knowledge cutoff, the less current its knowledge becomes. RAG systems connect models with supplemental external data in real time and incorporate up-to-date information into generated responses.

Enterprises use RAG to equip models with specific information such as proprietary customer data, authoritative research and other relevant documents.

RAG models can also connect to the internet with application programming interfaces (APIs) and gain access to real-time social media feeds and consumer reviews for a better understanding of market sentiment. Meanwhile, access to breaking news and search engines can lead to more accurate responses as models incorporate the retrieved information into the text-generation process.

Lower risk of AI hallucinations

Generative AI models such as OpenAI’s GPT work by detecting patterns in their training data, then using those patterns to predict the most likely output for a given user input. Sometimes models detect patterns that don’t exist. A hallucination or confabulation happens when a model presents incorrect or made-up information as though it were factual.

RAG anchors LLMs in specific knowledge backed by factual, authoritative and current data. Compared to a generative model operating only on its training data, RAG models tend to provide more accurate answers within the contexts of their external data. While RAG can reduce the risk of hallucinations, it cannot make a model error-proof.

Increased user trust

Chatbots, a common generative AI implementation, answer questions posed by human users. For a chatbot such as ChatGPT to be successful, users need to view its output as trustworthy. RAG models can include citations to the knowledge sources in their external data as part of their responses.

When RAG models cite their sources, human users can verify those outputs to confirm accuracy while consulting the cited works for follow-up clarification and additional information. Corporate data storage is often a complex and siloed maze. RAG responses with citations point users directly toward the materials they need.

Expanded use cases

Access to more data means that one model can handle a wider range of prompts. Enterprises can optimize models and gain more value from them by broadening their knowledge bases, in turn expanding the contexts in which those models generate reliable results.

By combining generative AI with retrieval systems, RAG models can retrieve and integrate information from multiple data sources in response to complex queries.

Enhanced developer control and model maintenance

Modern organizations constantly process massive quantities of data, from order inputs to market projections to employee turnover and more. Effective data pipeline construction and data storage are paramount for a strong RAG implementation.

At the same time, developers and data scientists can adjust the data sources a model has access to at any time. Repurposing a model from one task to another becomes a matter of adjusting its external knowledge sources rather than fine-tuning or retraining it. When fine-tuning is needed, developers can focus on that work instead of managing the model’s data sources.

Greater data security

Because RAG connects a model to external knowledge sources rather than incorporating that knowledge into the model’s training data, it maintains a divide between the model and that external knowledge. Enterprises can use RAG to preserve first-party data while simultaneously granting models access to it—access that can be revoked at any time.

However, enterprises must be vigilant to maintain the security of the external databases themselves. RAG systems commonly use vector databases, which store data as embeddings: numerical representations of the original data points. If these databases are breached, attackers may be able to reverse the embedding process and recover the original data, especially if the vector database is unencrypted.

RAG use cases

RAG systems essentially enable users to query databases with conversational language. The data-powered question-answering abilities of RAG systems have been applied across a range of use cases, including:

- Specialized chatbots and virtual assistants

- Research

- Content generation

- Market analysis and product development

- Knowledge engines

- Recommendation services

Specialized chatbots and virtual assistants

Enterprises wanting to automate customer support might find that their AI models lack the specialized knowledge needed to adequately assist customers. RAG systems connect models to internal data, equipping customer support chatbots with the latest knowledge about a company’s products, services and policies.

The same principle applies to AI avatars and personal assistants. Connecting the underlying model with the user’s personal data and referring to previous interactions provides a more customized user experience.

Research

Able to read internal documents and interface with search engines, RAG models excel at research. Financial analysts can generate client-specific reports with up-to-date market information and prior investment activity, while medical professionals can engage with patient and institutional records.

Content generation

The ability of RAG models to cite authoritative sources can lead to more reliable content generation. While all generative AI models can hallucinate, RAG makes it easier for users to verify outputs for accuracy.

Market analysis and product development

Business leaders can consult social media trends, competitor activity, sector-relevant breaking news and other online sources to better inform business decisions. Meanwhile, product managers can reference customer feedback and user behaviors when considering future development choices.

Knowledge engines

RAG systems can empower employees with internal company information. Streamlined onboarding processes, faster HR support and on-demand guidance for employees in the field are just a few ways businesses can use RAG to enhance job performance.

Recommendation services

By analyzing previous user behavior and comparing it with current offerings, RAG systems can power more accurate recommendation services. E-commerce platforms and content delivery services alike can use RAG to keep customers engaged and spending.

How does RAG work?

RAG works by combining information retrieval models with generative AI models to produce more authoritative content. RAG systems query a knowledge base and add more context to a user prompt before generating a response.

Standard LLMs source information from their training datasets. RAG adds an information retrieval component to the AI workflow, gathering relevant information and feeding that to the generative AI model to enhance response quality and utility.

RAG systems follow a five-stage process:

- The user submits a prompt.

- The information retrieval model queries the knowledge base for relevant data.

- Relevant information is returned from the knowledge base to the integration layer.

- The RAG system engineers an augmented prompt to the LLM with enhanced context from the retrieved data.

- The LLM generates an output, which is returned to the user.
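The five stages above can be sketched as a single orchestration function. This is a minimal illustration rather than a production design. `rag_answer`, `keyword_retrieve` and the echo generator are hypothetical names. A toy word-overlap retriever stands in for an embedding-based retriever, a plain Python function stands in for the LLM, and stages 2 and 3 collapse into one call.

```python
from typing import Callable

# Hypothetical interfaces: a retriever maps (query, k) to k relevant passages,
# and a generator maps a prompt string to the LLM's output string.
Retriever = Callable[[str, int], list[str]]
Generator = Callable[[str], str]


def rag_answer(query: str, retrieve: Retriever, generate: Generator, k: int = 3) -> str:
    """Run one RAG request through the five stages described above."""
    # Stage 1: the user submits a prompt (the `query` argument).
    # Stages 2-3: the retriever queries the knowledge base and the relevant
    # passages are returned to this function, which acts as the integration layer.
    passages = retrieve(query, k)
    # Stage 4: engineer an augmented prompt from the query plus retrieved context.
    context = "\n".join(f"- {p}" for p in passages)
    prompt = f"Use the context to answer.\nContext:\n{context}\n\nQuestion: {query}"
    # Stage 5: the LLM generates an output, which is returned to the user.
    return generate(prompt)


if __name__ == "__main__":
    docs = ["The return window is 30 days.", "Support is open 9am to 5pm."]

    def keyword_retrieve(query: str, k: int) -> list[str]:
        # Toy retriever: rank documents by the number of words shared with the query.
        words = set(query.lower().split())
        return sorted(docs, key=lambda d: -len(words & set(d.lower().split())))[:k]

    # An "echo" generator that returns the prompt unchanged, so the augmented
    # prompt is visible instead of a model's answer.
    print(rag_answer("how long is the return window", keyword_retrieve, lambda p: p, k=1))
```

Passing the retriever and generator in as callables keeps the orchestration logic independent of any particular vector database or model provider.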

This process showcases how RAG gets its name. The RAG system retrieves data from the knowledge base, augments the prompt with added context and generates a response.

Components of a RAG system

RAG systems contain four primary components:

- The knowledge base: The external data repository for the system.

- The retriever: An AI model that searches the knowledge base for relevant data.

- The integration layer: The portion of the RAG architecture that coordinates its overall functioning.

- The generator: A generative AI model that creates an output based on the user query and retrieved data.

Other components might include a ranker, which ranks retrieved data based on relevance, and an output handler, which formats the generated response for the user.
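A ranker can be as simple as a second pass over the retriever’s candidates. The sketch below assumes that each candidate already carries a relevance score, either from the retriever or from a separate reranking model. It shows only the ordering, filtering and truncation logic. `Candidate`, `rank`, `top_n` and `min_score` are illustrative names and are not part of any specific framework.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A retrieved passage and its relevance score (assumed to be precomputed)."""
    text: str
    score: float


def rank(candidates: list[Candidate], top_n: int, min_score: float = 0.0) -> list[Candidate]:
    """Sort by descending score, drop low scores and duplicate texts, keep top_n."""
    if top_n <= 0:
        return []
    seen: set[str] = set()
    ranked: list[Candidate] = []
    for cand in sorted(candidates, key=lambda c: c.score, reverse=True):
        if cand.score < min_score or cand.text in seen:
            continue  # too weak to help, or already represented
        seen.add(cand.text)
        ranked.append(cand)
        if len(ranked) == top_n:
            break
    return ranked
```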

The knowledge base

The first stage in constructing a RAG system is creating a queryable knowledge base. The external data repository can contain data from countless sources: PDFs, documents, guides, websites, audio files and more. Much of this will be unstructured data, meaning that it does not follow a predefined data model or schema.

RAG systems use a process called embedding to transform data into numerical representations called vectors. The embedding model places each data point in a multidimensional mathematical space so that position reflects meaning: data points that are similar in meaning end up close together.
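To make the idea of closeness concrete, the sketch below compares vectors with cosine similarity, a common similarity measure for embeddings. The `toy_embed` function is a hypothetical stand-in that counts words over a fixed vocabulary. A real embedding model produces dense vectors in which texts with related meanings land near each other even when they share no words.

```python
import math
from collections import Counter


def toy_embed(text: str, vocab: list[str]) -> list[float]:
    """Hypothetical stand-in for an embedding model: a bag-of-words count vector.

    Learned embeddings capture meaning rather than literal word overlap; this
    toy version only illustrates how vectors are compared.
    """
    counts = Counter(text.lower().split())
    return [float(counts[word]) for word in vocab]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """1.0 means same direction (very similar); 0.0 means orthogonal (unrelated)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0
```

With the vocabulary `["cat", "dog", "pet", "stock", "market"]`, "cat pet" and "dog pet" score about 0.5 because they share a word, while "cat pet" and "stock market" score 0.0.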

Knowledge bases must be continually updated to maintain the RAG system’s quality and relevance.

LLM inputs are limited by the model’s context window: the maximum amount of text, measured in tokens, that it can process at once. Splitting documents into smaller chunks helps ensure that the retrieved text added to the prompt fits within the context window of the LLM in the RAG system.
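One way to respect the context window is to add retrieved chunks to the prompt only while they fit a token budget. The sketch below assumes the chunks arrive sorted by relevance. It approximates token counts with whitespace-separated words, whereas a real system would count tokens with the target LLM’s own tokenizer. `pack_context` is a hypothetical helper name.

```python
def pack_context(chunks: list[str], max_tokens: int) -> list[str]:
    """Greedily keep relevance-ordered chunks while they fit in a token budget.

    Token counts are approximated by whitespace-separated words (an assumption);
    a real system would use the target LLM's tokenizer.
    """
    packed: list[str] = []
    used = 0
    for chunk in chunks:
        cost = len(chunk.split())
        if used + cost > max_tokens:
            continue  # this chunk would overflow the budget; a shorter one may still fit
        packed.append(chunk)
        used += cost
    return packed
```

Skipping an oversized chunk instead of stopping lets a later, shorter chunk use the remaining budget. Stopping at the first overflow would instead guarantee that relevance order is never broken.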

Chunk size is an important hyperparameter for the RAG system. When chunks are too large, the data points can become too general and fail to correspond directly to potential user queries. But if chunks are too small, the data points can lose semantic coherency.
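Fixed-size chunking with overlap is one simple way to expose chunk size as a tunable hyperparameter. In this sketch, `chunk_size` and `overlap` are counted in words, which is an assumption: production systems usually count tokens. `chunk_words` is a hypothetical name.

```python
def chunk_words(text: str, chunk_size: int, overlap: int = 0) -> list[str]:
    """Split text into chunks of at most `chunk_size` words.

    Consecutive chunks share `overlap` words, so context that straddles a
    chunk boundary appears in both neighboring chunks.
    """
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks: list[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reached the end of the text
    return chunks
```

Raising `chunk_size` yields fewer, broader chunks. Lowering it yields more focused chunks that may lose surrounding context, which is the trade-off described above.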

The retriever

Vectorizing the data prepares the knowledge base for semantic vector search, a technique that identifies points in the database that are similar in meaning to the user’s query. Semantic search algorithms can query massive databases quickly. Unlike traditional keyword search, they can find relevant information even when it does not share exact terms with the query.

The information retrieval model transforms the user’s query into an embedding and then searches the knowledge base for similar embeddings. The closest matches are then returned from the knowledge base.
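The retrieval step can be sketched as an exact nearest-neighbor search. It scores every stored embedding against the query embedding and keeps the best `k`. This assumes two things: the query has already been embedded with the same model used for the knowledge base, and the index is a plain dictionary from hypothetical chunk IDs to vectors. Vector databases typically replace this brute-force loop with approximate nearest-neighbor indexes so that search stays fast at scale.

```python
import heapq
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.hypot(*a) * math.hypot(*b)
    return dot / norms if norms else 0.0


def top_k(query_vec: list[float], index: dict[str, list[float]], k: int) -> list[tuple[str, float]]:
    """Brute-force search: return the k (chunk_id, score) pairs most similar to the query."""
    scored = ((cosine(query_vec, vec), chunk_id) for chunk_id, vec in index.items())
    best = heapq.nlargest(k, scored)  # highest similarity first
    return [(chunk_id, score) for score, chunk_id in best]
```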

The integration layer

The integration layer is the center of the RAG architecture, coordinating the processes and passing data around the network. With the added data from the knowledge base, the RAG system creates a new prompt for the LLM component. This prompt consists of the original user query plus the enhanced context returned by the retrieval model.

RAG systems employ various prompt engineering techniques to automate effective prompt creation and help the LLM return the best possible response. Meanwhile, LLM orchestration frameworks such as the open source LangChain and LlamaIndex or IBM® watsonx Orchestrate™ govern the overall functioning of an AI system.
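The sketch below shows a minimal version of the prompt assembly an integration layer performs. The template wording is an illustrative assumption rather than a standard. It numbers the retrieved passages so the model can cite them, tells the model to stay within the context, and gives it an explicit way to decline. `build_augmented_prompt` and `max_passages` are hypothetical names. Frameworks such as LangChain and LlamaIndex provide their own prompt-template utilities.

```python
def build_augmented_prompt(query: str, passages: list[str], max_passages: int = 4) -> str:
    """Assemble an augmented prompt: instructions, numbered context, then the user query."""
    numbered = "\n".join(
        f"[{i}] {p.strip()}" for i, p in enumerate(passages[:max_passages], start=1)
    )
    return (
        "Answer the question using only the context passages below.\n"
        "Cite the passages you use by number, e.g. [1].\n"
        "If the context does not contain the answer, say that you don't know.\n\n"
        f"Context:\n{numbered}\n\n"
        f"Question: {query.strip()}\n"
        "Answer:"
    )
```

Numbering the passages ties each claim in the output back to a retrievable source, which supports the citation and verification benefits described earlier.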

The generator

The generator creates an output based on the augmented prompt fed to it by the integration layer. The prompt synthesizes the user input with the retrieved data and instructs the generator to consider this data in its response. Generators are typically pretrained language models, such as GPT, Claude or Llama.

What is the difference between RAG and fine-tuning?

The difference between RAG and fine-tuning is that RAG lets an LLM query an external data source while fine-tuning trains an LLM on domain-specific data. Both have the same general goal: to make an LLM perform better in a specified domain.

RAG and fine-tuning are often contrasted but can be used in tandem. Fine-tuning increases a model’s familiarity with the intended domain and output requirements, while RAG assists the model in generating relevant, high-quality outputs.

Retrieval-Augmented Generation (RAG): A Comprehensive Technical Report on Architecture, Applications, and Advancements

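The Challenge of AI Hallucination

A direct consequence of the parametric knowledge boundary is the phenomenon of “hallucination,” also known as confabulation. It is defined as the tendency of an LLM to generate responses that sound plausible, are coherent, and are delivered with confidence, yet are factually inaccurate or entirely fabricated. This behavior stems from several fundamental causes. When faced with a question that falls into a gap in its training data or concerns events after its knowledge cutoff, the model tries to extrapolate from learned patterns. In essence, it makes an educated guess that prioritizes linguistic plausibility over factual truth. At its core, an LLM is a pattern-prediction engine, not a truth-seeking engine, and it lacks any built-in mechanism for fact-checking its output against external, real-time sources.

Hallucinations may be amusing in casual settings, but they create serious liability issues in professional environments. The framing of the problem has evolved from a purely technical limitation into a significant business risk. Academic literature often describes hallucination as a model defect. In enterprise and industry contexts, however, the focus is on the serious consequences of that defect: the spread of misinformation, the potential leakage of sensitive data patterns, and the resulting reputational damage. In high-stakes “Your Money or Your Life” (YMYL) domains such as healthcare, finance, and legal services, a single hallucinated response can have dire consequences. Reframing hallucination from a “bug” to a “risk” explains the strategic imperative behind RAG’s rapid adoption. RAG is not merely a performance-enhancing tool. It is a foundational component of responsible AI, providing a mechanism for governance, auditability, and risk mitigation by ensuring that AI-generated content can be traced back to verifiable sources.

Introduction to Retrieval-Augmented Generation (RAG): The Solution Framework

Retrieval-Augmented Generation is an AI framework designed specifically to address these fundamental LLM limitations. The core principle of RAG is to combine the LLM’s powerful, intrinsic parametric knowledge with the vast, dynamic, non-parametric information stored in an external knowledge base. The framework works by reordering the LLM’s process. Instead of generating a response immediately from its internal memory, a RAG system first retrieves relevant, current, and authoritative information from designated external data sources. This retrieved context is then supplied to the LLM along with the original query, “grounding” the model’s subsequent output in verifiable facts. This paradigm shift improves the accuracy, reliability, and timeliness of LLM-generated content, making the model a more trustworthy and valuable asset for knowledge-intensive tasks.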

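Anatomy of a Retrieval-Augmented Generation System

A RAG system is not a monolith but a sophisticated pipeline made up of an offline stage and an online stage. The offline stage prepares the knowledge base for efficient retrieval, while the online stage runs in real time to answer user queries. Understanding this structure is essential for building and optimizing robust RAG applications.

Architecture Overview and Diagram

The RAG pattern consists of two main processes: an “ingestion” or “indexing” process that runs offline to prepare the data, and an “inference” or “query” process that runs in real time when a user asks a question. The following diagram shows the complete end-to-end architecture.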

```mermaid
graph TD
    subgraph offline ["Offline: Data Ingestion and Indexing Pipeline"]
        A("Data Sources") --> B("Load and Chunk")
        B --> C{"Embedding Model"}
        C --> D("Vector Database")
    end
    subgraph online ["Online: Real-Time Inference Pipeline"]
        E["User Query"] --> F{"Embedding Model"}
        F --> G("Similarity Search")
        D --> G
        G --> H("Augmented Prompt")
        H --> I{"LLM<br>(Generator)"}
        I --> J("Generated Response")
    end
    style A fill:#D6EAF8,stroke:#333,stroke-width:2px
    style D fill:#D1F2EB,stroke:#333,stroke-width:2px
    style E fill:#FEF9E7,stroke:#333,stroke-width:2px
    style J fill:#E8F8F5,stroke:#333,stroke-width:2px
```

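Explanation of the architecture flow:

- Data ingestion (offline): This process begins by sourcing data from various external locations such as document repositories, databases, or APIs. The data is loaded, cleaned, and split into smaller, semantically coherent “chunks.” An embedding model processes each chunk, converting the text into a numerical vector representation. These vector embeddings are stored and indexed in a specialized vector database, creating a searchable knowledge library.

- Inference (online): When a user submits a query, the real-time pipeline is activated. The user’s query is converted into a vector embedding using the same model that was used in the ingestion stage. The system then performs a similarity search over the vector database to find the indexed data chunks that are semantically most relevant to the query. This retrieved information is combined with the original user query to create an “augmented prompt.” The enriched prompt now contains both the question and the factual context. It is sent to the LLM (the generator), which synthesizes the information to produce a final, fact-grounded response.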

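The Data Ingestion and Indexing Pipeline (the “Offline” Stage)

Before a RAG system can answer questions, the external knowledge must be carefully prepared and indexed. This process is foundational to the system’s ultimate performance.

- Document loading and preprocessing: The process begins by sourcing data from a variety of locations, which may include document repositories, databases, or APIs. This raw data is then preprocessed to ensure the quality of the knowledge base. The cleaning step can include removing stop words, normalizing text, and removing duplicate information.

- Chunking strategies: LLMs have finite context windows and work more effectively on focused pieces of text, so large documents must be split into smaller, semantically coherent “chunks.” The choice of chunking strategy is a critical design decision. Common approaches include fixed-size chunking (for example, every 256 tokens), sentence-based chunking, and more advanced custom methods that respect the document’s logical structure (for example, splitting at section or paragraph boundaries).

- Embedding generation: Each text chunk is passed through an embedding model, typically a transformer-based model such as BERT. This model converts the text into a high-dimensional numerical vector, known as a vector embedding. The embedding captures the semantic meaning of the chunk, allowing the system to retrieve information based on conceptual similarity rather than simple keyword matching.

- Vector database and indexing: The resulting vector embeddings are stored and indexed in a specialized vector store. Systems such as the Faiss library and the Qdrant and ChromaDB databases are highly optimized for efficient vector similarity search, so the system can quickly find the chunks that are semantically closest to a user’s query.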
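The ingestion steps above can be sketched end to end in plain Python. This sketch works under several assumptions:

- Preprocessing covers only Unicode normalization, whitespace cleanup and case-insensitive exact-duplicate removal. Stop-word removal is omitted.
- Sentences are split with a simple regular expression.
- Chunk limits are counted in words.
- `embed` is a hypothetical callable standing in for a real embedding model.

The returned (chunk, vector) pairs are what would be written to a vector store.

```python
import re
import unicodedata
from typing import Callable


def clean_corpus(docs: list[str]) -> list[str]:
    """Preprocessing: Unicode-normalize, collapse whitespace, drop empty and duplicate docs."""
    seen: set[str] = set()
    cleaned: list[str] = []
    for doc in docs:
        text = re.sub(r"\s+", " ", unicodedata.normalize("NFKC", doc)).strip()
        key = text.casefold()  # case-insensitive duplicate check
        if text and key not in seen:
            seen.add(key)
            cleaned.append(text)
    return cleaned


def chunk_by_sentences(text: str, max_words: int) -> list[str]:
    """Sentence-based chunking: pack whole sentences into chunks of at most max_words words.

    A rough regex splits on ., ! or ? followed by whitespace (so abbreviations
    like "e.g." are split too). A single sentence longer than max_words becomes
    its own oversized chunk instead of being cut.
    """
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    chunks: list[str] = []
    current: list[str] = []
    count = 0
    for sentence in sentences:
        n = len(sentence.split())
        if current and count + n > max_words:
            chunks.append(" ".join(current))
            current, count = [], 0
        current.append(sentence)
        count += n
    if current:
        chunks.append(" ".join(current))
    return chunks


def ingest(
    docs: list[str], embed: Callable[[str], list[float]], max_words: int = 200
) -> list[tuple[str, list[float]]]:
    """Offline pipeline: clean, then chunk, then embed each chunk."""
    index: list[tuple[str, list[float]]] = []
    for doc in clean_corpus(docs):
        for chunk in chunk_by_sentences(doc, max_words):
            index.append((chunk, embed(chunk)))
    return index
```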

The Core RAG Workflow: From Query to Response (the “Online” Stage)