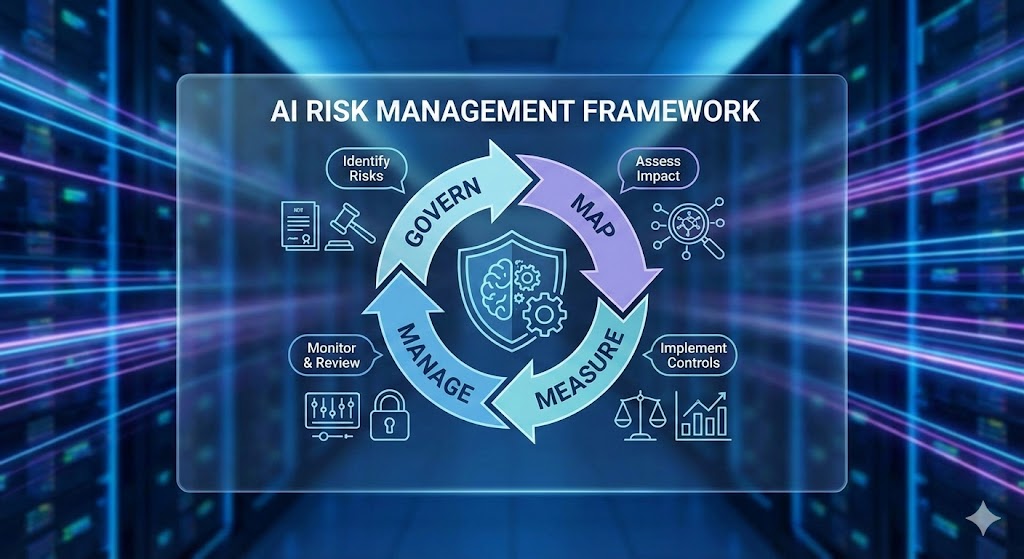

AI Risk Management Framework

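Overview and Scope | 9 Key Risks | Risk Rating | Management Process | Governance

A risk control system for stable AI use. The framework proactively identifies, assesses, and controls risks that can arise at every stage of AI use, ensuring the reliability and stability of financial services.

Purpose: Establish a foundation for stable AI use through proactive risk identification and control.

Scope: Internally developed AI, external/cloud AI, […]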
